Introduction

In my previous article “Connecting to Azure Data Lake Storage Gen2 from PowerShell using REST API – a step-by-step guide“, I showed and explained the connection using access keys.

As you probably know, an access key grants a lot of privileges. In fact, your storage account key is similar to the root password for your storage account. As Microsoft says:

Always be careful to protect your account key. Avoid distributing it to other users, hard-coding it, or saving it anywhere in plaintext that is accessible to others. Regenerate your account key using the Azure portal if you believe it may have been compromised.

So what if you don’t want to use access keys at all? And what if you need to grant access only to a particular folder? Fortunately, there is an alternative.

- You can create an application in Azure Active Directory and use it to grant ACL privileges in ADLS Gen2 on a particular path.

- With the ApplicationID and its secret, you can authorize your connection and get a bearer token…

- …and use this token in your REST connection to the ADLS Gen2 endpoint 🙂

This time you don’t need any sophisticated algorithm to sign the headers before making a connection 🙂 Unfortunately, in the case of data upload, you need to do a few more steps to make it work. But don’t worry, it’s easy-peasy 😉

What you will learn

With this detailed step-by-step guide you will get to know how to:

- Register a new application in AAD, create a secret and get the proper service principal id (because there are many IDs and it can be confusing…)

- Get the service principal id of that application if you don’t have permission to access Azure Active Directory in the portal.

- Grant this application a particular ACL permission in ADLS Gen2 to allow access only to the desired path. For example, to read/write only in folder2 and not in folder1 when the path looks like this: /folder1/folder2/. No IAM (RBAC) roles will be used, pure ACL!

- Access ADLS Gen2 using the above application credentials via REST API.

- Upload a file into ADLS Gen2 using REST API in three steps (CREATE, UPDATE, FLUSH)

Applications in Azure Active Directory

Let me start with some important information:

- In case you need to add an application, please ask your AAD administrator to create a new one. This is quite a common task, so the admin should already know how to do it 🙂 If not, just send them a link to this site, I’ll show how to do it.

- If you already have an application created for you, and you don’t have its service principal id, just head to the paragraph below and check how to get it manually. Just bear in mind that this is quite a confusing part, because there are many different ids available for an application: ApplicationID (same as ClientID), Registered app ObjectID, Enterprise App ObjectID (which is the service principal id) and DirectoryID (also known as TenantID). As you can see, it can give you a headache…

So even if you have some ID or application name given to you by your colleague, please check it anyway!

Application names are not unique, IDs (GUIDs) are everywhere in Azure. There are many ways to confuse us 🙂

An application is an object that you create together with a service principal and use to manage some identity features. Unfortunately, it’s not so straightforward to describe the differences between apps and service principals. It always confuses people. I think it will be easier if you read at least the first two paragraphs of this documentation. That should help you understand the relation between them and the reason why we create an app but still use the service principal id (and not the application id) in permissions.

Creating application in Azure portal

We will create the app, then get service principal id.

1. Enter the Azure portal, go to “Azure Active Directory” and then “App registrations”. THIS LINK should redirect you exactly to the proper page.

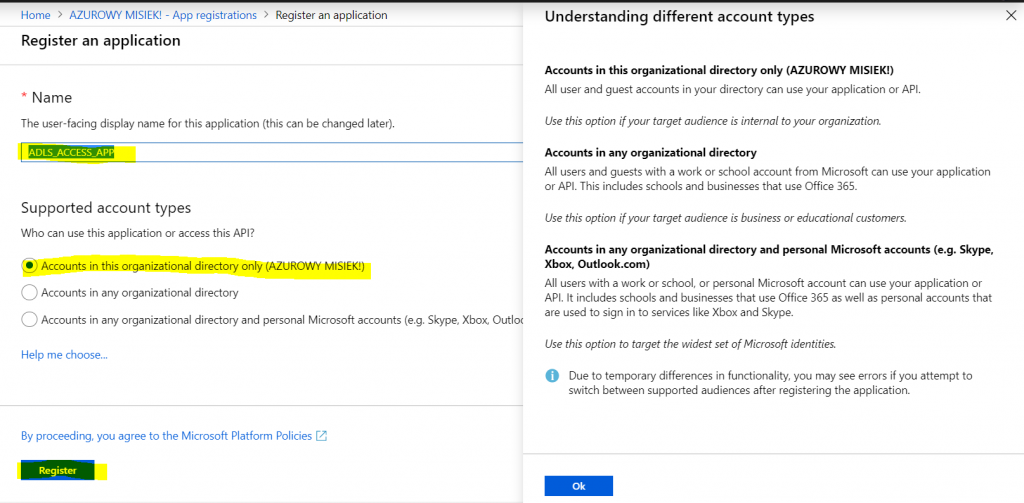

2. Click “New registration”, choose proper app type and provide a name. In my example it will be “ADLS_ACCESS_APP”

We will use regular app scoped to the AAD directory only. You can read about other types in screenshot below:

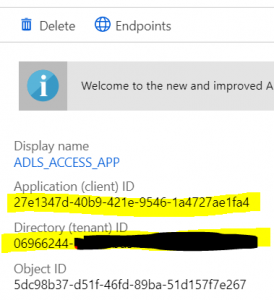

3. Save somewhere Directory (tenant) ID and Application (client) ID, they will be needed in our upload example for authorization. Remember, ApplicationID IS NOT your service principal id!

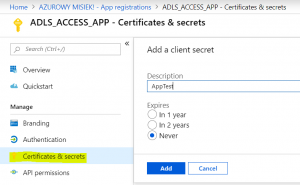

Adding a secret to your application

Just click “Certificates & secrets” in your app registration, then select “+ New client secret”.

It will generate a secret for you. Don’t worry, my app does not exist anymore, so don’t waste time using these values to do something evil xD

Copy the value now, it will be needed in our OAuth example.

How to get service principal id of the application using Azure portal

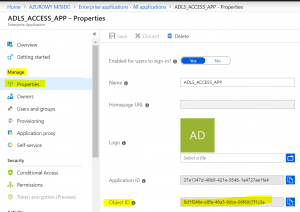

1. Now we need to go to the Azure Active Directory pane once again and this time click “Enterprise applications”.

Your application should be available on the list. Click it. In my example it’s the “ADLS_ACCESS_APP” application!

2. Click “Properties” in the “Manage” section of the left pane. As you can see, the Application ID in the form is the same. But the ObjectID is different. That’s because this is the service principal id 🙂 Copy it and save it somewhere with the other values.

How to get service principal id of the application using PowerShell

So you don’t have permission to access Azure Active Directory?

If you have an application already prepared by someone and you want to get/check its service principal id, then you can get this id as long as you are a member of that directory 🙂 This example uses the good old AzureRM module (I still don’t like the new Az…). If you don’t have this module, install it with the command below. Of course, don’t forget to log in to the proper Azure account!

```powershell
Install-Module -Name AzureRM
Connect-AzureRmAccount
```

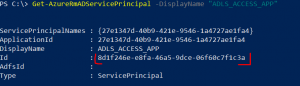

Search by Application Display Name (or App name)

```powershell
Get-AzureRmADServicePrincipal -DisplayName "ADLS_ACCESS_APP"
```

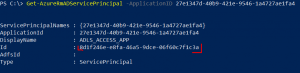

Search by Application ID

```powershell
Get-AzureRmADServicePrincipal -ApplicationID 27e1347d-40b9-421e-9546-1a4727ae1fa4
```

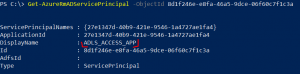

Check if given service principal id belongs to your application

You should always check if your admin gave you the proper id 🙂

```powershell
Get-AzureRmADServicePrincipal -ObjectId 8d1f246e-e8fa-46a5-9dce-06f60c7f1c3a
```

ACLs in ADLS Gen2

Once we have the proper service principal id, we can grant permissions in Azure Data Lake Storage Gen2. You can read more about ACLs in Microsoft Docs.

Just remember some important facts:

- There is a limit on the number of entries in an Access Control List and it is currently 32. So once you add our application, you will have 31 left. This is why Microsoft suggests adding your application to a proper AAD group and then adding the group as the ACL entry. Makes sense, but choose wisely 🙂

- To allow access you need to have ACL permissions set on every level of the given path: starting with the filesystem itself, then on every folder and subfolder that the user needs to access.

- The basic permission to allow “access” is “Execute”, and remember: it will not let the user read or list anything! Just “traverse” the path!

- So if you want to grant read access only to folder2 in the path my-filesystem:/folder1/folder2/ you need to grant Execute permission on my-filesystem, folder1 and folder2, and Read on folder2.

- To make it easier to propagate permissions, ACLs implement something called the “Default” permission. Setting this permission on folder1 will copy the permission to folder2 BUT ONLY if folder2 did not exist before applying those default permissions. So it applies only to newly created files and folders. This is definitely different from what everyone got used to in Windows. Permissions do not inherit! Defaults are copied only at creation time!

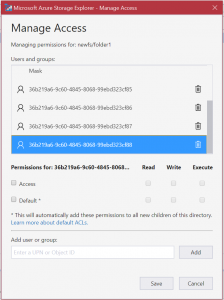

- An application or an AAD group added to the ACL will not be presented in the list with its user-friendly name. Unfortunately names are not unique (this is the reason that is given) and you will always be struggling with Object IDs in Azure Storage Explorer or the portal. That’s a pain in the ass! Hope it will change someday 😐 Because well… It looks ugly and is difficult to manage:

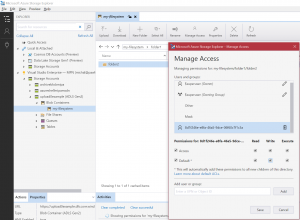
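The “Execute on every level, Read only on the target” rule from the list above can be sketched as a small planning helper. This is only an illustration: `Get-AclPlan` and its parameters are hypothetical names of mine, and the function does not call Azure at all, it just lists which permission each level of the path would need.

```powershell
function Get-AclPlan {
    <#
    .SYNOPSIS
    List the minimal ACL permission needed on every level of a path (hypothetical helper, no Azure calls).
    #>
    [CmdletBinding()]
    Param(
        [Parameter(Mandatory = $true)] [string] $FilesystemName,
        [Parameter(Mandatory = $true)] [string] $TargetPath,
        [string] $TargetPermission = 'r-x'   # assumption: read access on the target folder
    )

    # Split the path into folder names, accepting both "/" and "\" as separators
    $segments = @($TargetPath.Replace('\', '/').Trim('/').Split('/') | Where-Object { $_ -ne '' })

    # The filesystem itself always needs at least Execute to be traversed
    $plan = @([pscustomobject]@{ Path = "${FilesystemName}:/"; Permission = '--x' })

    $current = ''
    for ($i = 0; $i -lt $segments.Count; $i++) {
        $current = "$current/$($segments[$i])"
        # Only the last folder gets the target permission, every folder above it gets Execute only
        $isTarget = ($i -eq $segments.Count - 1)
        $permission = if ($isTarget) { $TargetPermission } else { '--x' }
        $plan += [pscustomobject]@{ Path = "${FilesystemName}:$current"; Permission = $permission }
    }
    $plan
}

Get-AclPlan -FilesystemName 'my-filesystem' -TargetPath '/folder1/folder2/'
```

For the example path this yields three entries: Execute on the filesystem, Execute on folder1, and Read plus Execute on folder2.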

Applying ACL permissions using Azure Storage Explorer

Currently the “Storage Explorer (preview)” in the portal cannot manage ACLs on a filesystem, only on files. So we need to use Azure Storage Explorer, an application that you can download from here.

We will implement permissions according to the following assumptions:

- Path will be: my-filesystem:/folder1/folder2

- We want the user to be able to read and write only in folder2. So there are no R/W permissions on my-filesystem and folder1, which means that the user will not be able to even list the content of the filesystem or folder1.

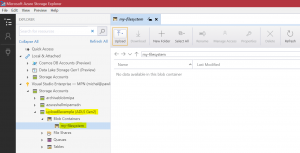

1. Open Azure Storage Explorer and go to your ADLS Gen2 account and target filesystem.

2. Right-click the filesystem and choose “Manage Access…”

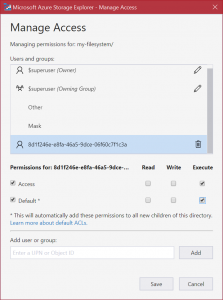

3. In our example we will use the service principal id of the application that was created at the beginning of this tutorial. Of course you can add the ObjectID of an AAD group instead. You can also add a user principal name (UPN), so a regular user like user@yourdomain.com, to allow access to this storage, for example to use Azure Databricks and the Credential Passthrough feature 🙂

Add your service principal id and grant “Access” with “Execute”, then “Default” also with “Execute”.

The Default, as it says, will automatically add this permission to all new children of this directory. Click “Save”.

4. Now we will create folder1, then folder2 inside folder1. Note that folder1 and folder2 already have our service principal id added and the “Execute” permission applied.

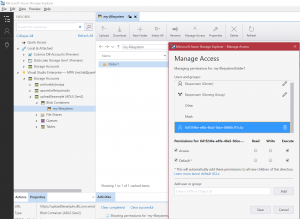

5. Right-click folder2, choose “Manage access…” and change the permissions of the application. Now we want to read and write everywhere in folder2, even in all future subdirectories.

So we will grant R/W/E on both “layers”: “Access” and “Default”.
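If you ever want to script this step instead of clicking, the two “layers” map to two entries in the ACL string that the REST API accepts in the `x-ms-acl` header of a `setAccessControl` call. Below is a minimal sketch that only builds that string fragment. `New-AdlsAclEntry` is a hypothetical helper of mine, and I’m assuming the documented `[scope:][type]:[id]:[permissions]` entry format, where a service principal is addressed with the `user` type.

```powershell
function New-AdlsAclEntry {
    <#
    .SYNOPSIS
    Build the ACL entries for one principal (hypothetical helper, string building only).
    #>
    [CmdletBinding()]
    Param(
        [Parameter(Mandatory = $true)] [string] $ObjectId,
        # Three characters: r or -, w or -, x or -
        [Parameter(Mandatory = $true)] [ValidatePattern('^[r-][w-][x-]$')] [string] $Permission,
        # Also emit the "default" scoped entry that is copied to newly created children
        [switch] $IncludeDefault
    )

    # "Access" layer entry
    $entries = @("user:${ObjectId}:$Permission")

    # "Default" layer entry
    if ($IncludeDefault) {
        $entries += "default:user:${ObjectId}:$Permission"
    }

    $entries -join ','
}

New-AdlsAclEntry -ObjectId '8d1f246e-e8fa-46a5-9dce-06f60c7f1c3a' -Permission 'rwx' -IncludeDefault
```

Be careful: as far as I know, `setAccessControl` replaces the whole ACL of the path, so in a real call this fragment would have to be combined with the entries that already exist there (owner, owning group, other and so on).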

UPLOADING A FILE

To upload a file we need all the required parameters and several important steps:

- Get the bearer token from the Azure OAuth 2.0 API

- Create an empty file on ADLS Gen2. This is required! Uploading content can be done only as an ‘append’ operation on an already existing object.

- Upload the content using the proper data stream and position offset (with a single upload the position is zero)

- Flush the file on the ADLS side to commit the uploaded data, passing the final position (the offset just past the last byte, so the file size 🙂 )
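The three storage calls from the steps above can be sketched as a plain list of requests, before we get to the real functions. `Get-AdlsUploadPlan` is a hypothetical helper of mine that sends nothing; it only shows which HTTP method and query string each step uses for a single upload.

```powershell
function Get-AdlsUploadPlan {
    <#
    .SYNOPSIS
    Describe the create/append/flush requests for a single upload (hypothetical helper, no network calls).
    #>
    [CmdletBinding()]
    Param(
        [Parameter(Mandatory = $true)] [string] $StorageAccountName,
        [Parameter(Mandatory = $true)] [string] $FilesystemName,
        [Parameter(Mandatory = $true)] [string] $ADLSFilePath,
        [Parameter(Mandatory = $true)] [long] $FileSize
    )

    # Normalizing backslashes here is my own addition for readability of the resulting URI
    $path = $ADLSFilePath.Replace('\', '/').Trim('/')
    $base = "https://$StorageAccountName.dfs.core.windows.net/$FilesystemName/$path"

    # 1. Create an empty file
    [pscustomobject]@{ Step = 'Create'; Method = 'PUT'; Uri = "${base}?resource=file" }
    # 2. Append the whole content at position 0 (single upload)
    [pscustomobject]@{ Step = 'Append'; Method = 'PATCH'; Uri = "${base}?action=append&position=0" }
    # 3. Flush: the position is the total number of bytes appended, so the file size
    [pscustomobject]@{ Step = 'Flush'; Method = 'PATCH'; Uri = "${base}?action=flush&position=$FileSize" }
}

Get-AdlsUploadPlan -StorageAccountName 'upload0example' -FilesystemName 'my-filesystem' `
    -ADLSFilePath 'folder1\folder2\file.txt' -FileSize 1024 | Format-Table -AutoSize
```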

Summarizing required parameters

So far we have all parameters of the created app and all permissions are granted. We want to upload a file… so we also need a file and an exact target location 🙂

Let’s summarize all available details:

- ApplicationID: 27e1347d-40b9-421e-9546-1a4727ae1fa4

- Application Secret: 8h]D//c3FHp_FNF49]SygxL3_lm2O:G4

- Application Service Principal ID: 8d1f246e-e8fa-46a5-9dce-06f60c7f1c3a

- DirectoryID (also known as TenantID): [GUID but REDACTED :P]

- Datalake Storage Account Name: upload0example

- Filesystem name: my-filesystem

- ADLS file path: folder1\folder2\file.txt

- Local file path: C:\tmp\My_50MB_file_that_i_want_to_upload.txt

Again, do not worry about secrets and id values ;] They are not valid anymore. I put them just to make the example as real as possible.

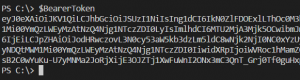

Get the bearer token

We need to get a token from the OAuth2 endpoint: https://login.microsoftonline.com/$DirectoryID/oauth2/v2.0/token

It requires some important variables. Set the scope to https://storage.azure.com/.default and the grant type to client_credentials. Use your ApplicationID and app secret, and store the returned token in a variable.

```powershell
function Get-OAuth2Token {
    <#
    .SYNOPSIS
    Get OAuth 2.0 bearer token from Azure.
    #>
    [CmdletBinding()]
    Param(
        [Parameter(Mandatory = $true, Position = 1)] [string] $ClientID,
        [Parameter(Mandatory = $true, Position = 2)] [string] $ClientSecret,
        [Parameter(Mandatory = $true, Position = 3)] [string] $Scope,
        [Parameter(Mandatory = $true, Position = 4)] [string] $GrantType,
        [Parameter(Mandatory = $true, Position = 5)] [string] $DirectoryID
    )

    # Form fields expected by the token endpoint
    $formData = @{
        client_id     = $ClientID
        client_secret = $ClientSecret
        scope         = $Scope
        grant_type    = $GrantType
    }
    $uri = "https://login.microsoftonline.com/$DirectoryID/oauth2/v2.0/token"

    try {
        $response = Invoke-RestMethod -Uri $uri -Method Post -Body $formData -ContentType "application/x-www-form-urlencoded"
        $response.access_token
    }
    catch {
        # Rethrow with enough context to debug, but never include the secret
        $ErrorMessage = $_.Exception.Message
        $StatusDescription = $_.Exception.Response.StatusDescription
        Throw $ErrorMessage + " " + $StatusDescription + " ClientID: $ClientID, Scope: $Scope, GrantType: $GrantType, DirectoryID: $DirectoryID"
    }
}

$ClientID = '27e1347d-40b9-421e-9546-1a4727ae1fa4' # ApplicationID
$ClientSecret = '8h]D//c3FHp_FNF49]SygxL3_lm2O:G4'
$Scope = 'https://storage.azure.com/.default'
$GrantType = 'client_credentials'
$DirectoryID = 'your_directory_id' # a.k.a. TenantID

$BearerToken = Get-OAuth2Token -ClientID $ClientID -ClientSecret $ClientSecret -Scope $Scope -GrantType $GrantType -DirectoryID $DirectoryID
```

The content of $BearerToken will look like this (reallly looooong value)
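Since the token is a JWT, you can peek inside it to double-check which identity it was issued for. Here is a minimal sketch, assuming the usual three dot-separated base64url parts. `ConvertFrom-JwtPayload` is a hypothetical helper of mine; it decodes the payload only and does not validate the signature. I would expect the `oid` claim to hold the service principal id, but treat the exact claim names as an assumption and check your own token.

```powershell
function ConvertFrom-JwtPayload {
    <#
    .SYNOPSIS
    Decode the payload (claims) of a JWT without validating it (hypothetical helper).
    #>
    [CmdletBinding()]
    Param(
        [Parameter(Mandatory = $true)] [string] $Token
    )

    $parts = $Token.Split('.')
    if ($parts.Length -lt 2) {
        throw "Not a JWT: expected at least two dot-separated parts."
    }

    # base64url -> base64: swap the URL-safe characters and restore the padding
    $payload = $parts[1].Replace('-', '+').Replace('_', '/')
    switch ($payload.Length % 4) {
        2 { $payload += '==' }
        3 { $payload += '=' }
    }

    $json = [System.Text.Encoding]::UTF8.GetString([System.Convert]::FromBase64String($payload))
    $json | ConvertFrom-Json
}

# Usage, with the token from the previous step:
# $claims = ConvertFrom-JwtPayload -Token $BearerToken
# $claims.oid   # compare with your service principal id
```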

Create a file

We need to create the file before we start uploading to it. Important facts:

- In my example there is an additional conditional header: If-None-Match with a value of *, which fails the creation if the file already exists.

- Use $BearerToken from the previous example to pass the token.

- You can always replace the Invoke-RestMethod cmdlet with Invoke-WebRequest to read all additional headers returned from the REST API

```powershell
function New-FileInADLS {
    <#
    .SYNOPSIS
    Create file in Data Lake Generation 2 using REST API and bearer token.
    #>
    [CmdletBinding()]
    Param(
        [Parameter(Mandatory = $true, Position = 1)] [string] $StorageAccountName,
        [Parameter(Mandatory = $true, Position = 2)] [string] $FilesystemName,
        [Parameter(Mandatory = $true, Position = 3)] [string] $BearerToken,
        [Parameter(Mandatory = $true, Position = 4)] [string] $ADLSFilePath
    )

    # Rest documentation:
    # https://docs.microsoft.com/en-us/rest/api/storageservices/datalakestoragegen2/path/create

    $PathToCreate = $ADLSFilePath.trim("/") # remove all "//path" or "path/"
    $method = "PUT"

    $headers = @{ }
    $headers.Add("x-ms-version", "2018-11-09")
    $headers.Add("Authorization", "Bearer $BearerToken")
    $headers.Add("If-None-Match", "*") # To fail if the destination already exists, use a conditional request with If-None-Match: "*"

    $URI = "https://$StorageAccountName.dfs.core.windows.net/" + $FilesystemName + "/" + $PathToCreate + "?resource=file"

    try {
        Invoke-RestMethod -method $method -Uri $URI -Headers $headers # returns empty response
    }
    catch {
        $ErrorMessage = $_.Exception.Message
        $StatusDescription = $_.Exception.Response.StatusDescription
        Throw $ErrorMessage + " " + $StatusDescription + " PathToCreate: $PathToCreate"
    }
}

$StorageAccountName = 'upload0example'
$FilesystemName = 'my-filesystem'
$ADLSFilePath = 'folder1\folder2\file.txt'

New-FileInADLS -StorageAccountName $StorageAccountName -FilesystemName $FilesystemName -BearerToken $BearerToken -ADLSFilePath $ADLSFilePath
```

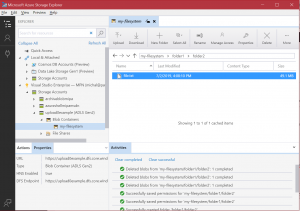

Our file now exists and does not have any size (yet 🙂 )

If you have permission errors during the file creation, please check if:

- your application is added to the ACL by its service principal id, not its application id

- permissions on your destination folder are set to at least Write and Execute

- every upper folder and the filesystem have the “Execute” permission granted to our application’s service principal id

So what if you want to create a file in a folder that you do not have access to?

```powershell
$ADLSFilePath = 'folder1\file.txt'
New-FileInADLS -StorageAccountName $StorageAccountName -FilesystemName $FilesystemName -BearerToken $BearerToken -ADLSFilePath $ADLSFilePath
```

Bazinga!

Uploading the content of a file

Ok, the most important phase of our process. I need to mention some other important information:

- Uploading (appending) the content does not make the file complete yet! You need to flush the file (see next step)

- My example is really, really simple and is not bulletproof.

- I’m using a single upload, my file is 50MB in size and I do not have any problems sending it and reading it after the upload.

- There is a huge topic regarding proper process usage and content description, like acquiring locks (a lease) before sending the file or providing headers such as Content-Length, Content-MD5, x-ms-content-type, x-ms-content-encoding, x-ms-content-language etc. This is not required, but it should be done as part of good practice!

- The ADLS Gen2 REST API is capable of receiving your file over parallel connections, as small chunks! This is why it requires the position within the file from us.

- I’m not sure how big a file can be in a single upload. So consider yourself warned 🙂

- And by the way, what if your 50GB file upload is interrupted by a connection error during the last seconds of a 1h upload?

- In fact this is why azcopy (which is used by Azure Storage Explorer) uploads the data into ADLS Gen2 in chunks, parallelized over several connections. Check it. Find the .azcopy folder in your user home directory (Windows: c:\Users\your_account\.azcopy, Linux: /home/your_account/.azcopy). By default azcopy logs there in a really detailed manner 🙂
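To illustrate why the API asks for a position, here is a minimal sketch of how a file could be cut into chunks for separate append calls. `Get-UploadChunk` is a hypothetical helper of mine and the 4MB default is an arbitrary assumption, not a documented limit. It only computes offsets and does not read or send anything.

```powershell
function Get-UploadChunk {
    <#
    .SYNOPSIS
    Compute position and size of every chunk for a chunked append (hypothetical helper, no I/O).
    #>
    [CmdletBinding()]
    Param(
        [Parameter(Mandatory = $true)] [long] $FileSize,
        [long] $ChunkSize = 4MB   # assumption: pick whatever suits your connection
    )

    if ($ChunkSize -le 0) {
        throw "ChunkSize must be positive."
    }

    [long] $position = 0
    while ($position -lt $FileSize) {
        # The last chunk may be smaller than ChunkSize
        $size = [Math]::Min($ChunkSize, $FileSize - $position)
        [pscustomobject]@{ Position = $position; Size = $size }
        $position += $size
    }
}

# A 10-byte file in 4-byte chunks: positions 0, 4 and 8
Get-UploadChunk -FileSize 10 -ChunkSize 4
```

Each chunk would then go to `?action=append&position=<Position>` with only its own bytes in the body, and a single flush with `position` equal to the total file size would commit them all. The simple script that follows does not do this; it sends everything in one append.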

Ok, now the script. Use other variables from previous steps.

```powershell
function Import-FileToADLS {
    <#
    .SYNOPSIS
    Upload file in Data Lake Generation 2 using REST API and bearer token.
    #>
    [CmdletBinding()]
    Param(
        [Parameter(Mandatory = $true, Position = 1)] [string] $StorageAccountName,
        [Parameter(Mandatory = $true, Position = 2)] [string] $FilesystemName,
        [Parameter(Mandatory = $true, Position = 3)] [string] $BearerToken,
        [Parameter(Mandatory = $true, Position = 4)] [string] $ADLSFilePath,
        [Parameter(Mandatory = $true, Position = 5)] [string] $FileToUpload
    )

    # Rest documentation:
    # https://docs.microsoft.com/en-us/rest/api/storageservices/datalakestoragegen2/path/update

    # The whole file is read into memory and sent in a single request
    $filebody = [System.IO.File]::ReadAllBytes($FileToUpload)
    $filesize = $filebody.Length

    # The file size is returned to the caller, it is needed later as the flush position
    $result = @{ }
    $result.Add("filesize", $filesize)

    $PathToUpload = $ADLSFilePath.trim("/") # remove all "//path" or "path/"
    $method = "PATCH"

    $headers = @{ }
    $headers.Add("x-ms-version", "2018-11-09")
    $headers.Add("Authorization", "Bearer $BearerToken")

    $URI = "https://$StorageAccountName.dfs.core.windows.net/" + $FilesystemName + "/" + $PathToUpload + "?action=append&position=0"

    try {
        $response = Invoke-WebRequest -method $method -Uri $URI -Headers $headers -Body $filebody # returns empty response
        $result.Add("response", $response)
        $result
    }
    catch {
        $ErrorMessage = $_.Exception.Message
        $StatusDescription = $_.Exception.Response.StatusDescription
        Throw $ErrorMessage + " " + $StatusDescription + " ADLSFilePath: $PathToUpload, FileToUpload: $FileToUpload"
    }
}

$StorageAccountName = 'upload0example'
$FilesystemName = 'my-filesystem'
$FileToUpload = 'C:\tmp\My_50MB_file_that_i_want_to_upload.txt'

$file_details = Import-FileToADLS -StorageAccountName $StorageAccountName -FilesystemName $FilesystemName -BearerToken $BearerToken -ADLSFilePath $ADLSFilePath -FileToUpload $FileToUpload
```

The file still does not have its size when you check it in Storage Explorer.

Currently the uploaded content exists only in a temporary cache of our ADLS service. If you want to finish the process, move to the next step.

We need the size of the sent file, because it will be used as the final position to flush the content from the cache. That’s why I’m returning it in the $file_details variable.

Flush the content of a file from cache to final destination

And now the final process:

```powershell
function Clear-AdlsFileCache {
    <#
    .SYNOPSIS
    Flush file in Data Lake Generation 2 using REST API and bearer token.
    #>
    [CmdletBinding()]
    Param(
        [Parameter(Mandatory = $true, Position = 1)] [string] $StorageAccountName,
        [Parameter(Mandatory = $true, Position = 2)] [string] $FilesystemName,
        [Parameter(Mandatory = $true, Position = 3)] [string] $BearerToken,
        [Parameter(Mandatory = $true, Position = 4)] [string] $ADLSFilePath,
        [Parameter(Mandatory = $true, Position = 5)] [string] $FlushPosition
    )

    # Rest documentation:
    # https://docs.microsoft.com/en-us/rest/api/storageservices/datalakestoragegen2/path/update

    $PathToFlush = $ADLSFilePath.trim("/") # remove all "//path" or "path/"
    $method = "PATCH"

    $headers = @{ }
    $headers.Add("x-ms-version", "2018-11-09")
    $headers.Add("Authorization", "Bearer $BearerToken")

    # PATCH http://{accountName}.{dnsSuffix}/{filesystem}/{path}?action={action}&position={position}
    $URI = "https://$StorageAccountName.dfs.core.windows.net/" + $FilesystemName + "/" + $PathToFlush + "?action=flush&position=$FlushPosition"

    try {
        $response = Invoke-WebRequest -method $method -Uri $URI -Headers $headers # returns empty response
        $response
    }
    catch {
        $ErrorMessage = $_.Exception.Message
        $StatusDescription = $_.Exception.Response.StatusDescription
        Throw $ErrorMessage + " " + $StatusDescription + " ADLSFilePath: $ADLSFilePath, FlushPosition: $FlushPosition"
    }
}

Clear-AdlsFileCache -StorageAccountName $StorageAccountName -FilesystemName $FilesystemName -BearerToken $BearerToken -ADLSFilePath $ADLSFilePath -FlushPosition $file_details.filesize
```

And finally our upload process is finished, the file has proper size.

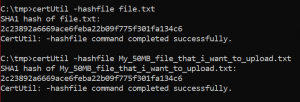

File verification

Just a little check.

Is this exactly what we have just tried to send? 🙂 Download the file and compare it with the original using a checksum:

```powershell
certUtil -hashfile file.txt
certUtil -hashfile My_50MB_file_that_i_want_to_upload.txt
```
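If you prefer to stay in PowerShell and get a simple true/false answer, the same comparison can be sketched with the built-in `Get-FileHash` cmdlet. `Test-FileHashMatch` is a hypothetical helper of mine, and defaulting to SHA256 is my own choice rather than anything the upload requires.

```powershell
function Test-FileHashMatch {
    <#
    .SYNOPSIS
    Return $true when both files have the same hash (hypothetical helper).
    #>
    [CmdletBinding()]
    Param(
        [Parameter(Mandatory = $true)] [string] $ReferencePath,
        [Parameter(Mandatory = $true)] [string] $DifferencePath,
        [string] $Algorithm = 'SHA256'   # assumption: any algorithm supported by Get-FileHash works
    )

    $reference = Get-FileHash -LiteralPath $ReferencePath -Algorithm $Algorithm
    $difference = Get-FileHash -LiteralPath $DifferencePath -Algorithm $Algorithm

    # Hashes are returned as hex strings, so a plain comparison is enough
    $reference.Hash -eq $difference.Hash
}

# Usage, after downloading file.txt next to the original:
# Test-FileHashMatch -ReferencePath 'My_50MB_file_that_i_want_to_upload.txt' -DifferencePath 'file.txt'
```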

Success! Have a beer! 🙂

UPLOAD A FILE – The complete PowerShell script!

Everything from the above examples placed in one script. Enjoy!

```powershell
function Get-OAuth2Token {
    <#
    .SYNOPSIS
    Get OAuth 2.0 bearer token from Azure.
    #>
    [CmdletBinding()]
    Param(
        [Parameter(Mandatory = $true, Position = 1)] [string] $ClientID,
        [Parameter(Mandatory = $true, Position = 2)] [string] $ClientSecret,
        [Parameter(Mandatory = $true, Position = 3)] [string] $Scope,
        [Parameter(Mandatory = $true, Position = 4)] [string] $GrantType,
        [Parameter(Mandatory = $true, Position = 5)] [string] $DirectoryID
    )

    $formData = @{
        client_id     = $ClientID
        client_secret = $ClientSecret
        scope         = $Scope
        grant_type    = $GrantType
    }
    $uri = "https://login.microsoftonline.com/$DirectoryID/oauth2/v2.0/token"

    try {
        $response = Invoke-RestMethod -Uri $uri -Method Post -Body $formData -ContentType "application/x-www-form-urlencoded"
        $response.access_token
    }
    catch {
        $ErrorMessage = $_.Exception.Message
        $StatusDescription = $_.Exception.Response.StatusDescription
        Throw $ErrorMessage + " " + $StatusDescription + " ClientID: $ClientID, Scope: $Scope, GrantType: $GrantType, DirectoryID: $DirectoryID"
    }
}

function New-FileInADLS {
    <#
    .SYNOPSIS
    Create file in Data Lake Generation 2 using REST API and bearer token.
    #>
    [CmdletBinding()]
    Param(
        [Parameter(Mandatory = $true, Position = 1)] [string] $StorageAccountName,
        [Parameter(Mandatory = $true, Position = 2)] [string] $FilesystemName,
        [Parameter(Mandatory = $true, Position = 3)] [string] $BearerToken,
        [Parameter(Mandatory = $true, Position = 4)] [string] $ADLSFilePath
    )

    # Rest documentation:
    # https://docs.microsoft.com/en-us/rest/api/storageservices/datalakestoragegen2/path/create

    $PathToCreate = $ADLSFilePath.trim("/") # remove all "//path" or "path/"
    $method = "PUT"

    $headers = @{ }
    $headers.Add("x-ms-version", "2018-11-09")
    $headers.Add("Authorization", "Bearer $BearerToken")
    $headers.Add("If-None-Match", "*") # To fail if the destination already exists, use a conditional request with If-None-Match: "*"

    $URI = "https://$StorageAccountName.dfs.core.windows.net/" + $FilesystemName + "/" + $PathToCreate + "?resource=file"

    try {
        Invoke-RestMethod -method $method -Uri $URI -Headers $headers # returns empty response
    }
    catch {
        $ErrorMessage = $_.Exception.Message
        $StatusDescription = $_.Exception.Response.StatusDescription
        Throw $ErrorMessage + " " + $StatusDescription + " PathToCreate: $PathToCreate"
    }
}

function Import-FileToADLS {
    <#
    .SYNOPSIS
    Upload file in Data Lake Generation 2 using REST API and bearer token.
    #>
    [CmdletBinding()]
    Param(
        [Parameter(Mandatory = $true, Position = 1)] [string] $StorageAccountName,
        [Parameter(Mandatory = $true, Position = 2)] [string] $FilesystemName,
        [Parameter(Mandatory = $true, Position = 3)] [string] $BearerToken,
        [Parameter(Mandatory = $true, Position = 4)] [string] $ADLSFilePath,
        [Parameter(Mandatory = $true, Position = 5)] [string] $FileToUpload
    )

    # Rest documentation:
    # https://docs.microsoft.com/en-us/rest/api/storageservices/datalakestoragegen2/path/update

    $filebody = [System.IO.File]::ReadAllBytes($FileToUpload)
    $filesize = $filebody.Length

    $result = @{ }
    $result.Add("filesize", $filesize)

    $PathToUpload = $ADLSFilePath.trim("/") # remove all "//path" or "path/"
    $method = "PATCH"

    $headers = @{ }
    $headers.Add("x-ms-version", "2018-11-09")
    $headers.Add("Authorization", "Bearer $BearerToken")

    $URI = "https://$StorageAccountName.dfs.core.windows.net/" + $FilesystemName + "/" + $PathToUpload + "?action=append&position=0"

    try {
        $response = Invoke-WebRequest -method $method -Uri $URI -Headers $headers -Body $filebody # returns empty response
        $result.Add("response", $response)
        $result
    }
    catch {
        $ErrorMessage = $_.Exception.Message
        $StatusDescription = $_.Exception.Response.StatusDescription
        Throw $ErrorMessage + " " + $StatusDescription + " ADLSFilePath: $PathToUpload, FileToUpload: $FileToUpload"
    }
}

function Clear-AdlsFileCache {
    <#
    .SYNOPSIS
    Flush file in Data Lake Generation 2 using REST API and bearer token.
    #>
    [CmdletBinding()]
    Param(
        [Parameter(Mandatory = $true, Position = 1)] [string] $StorageAccountName,
        [Parameter(Mandatory = $true, Position = 2)] [string] $FilesystemName,
        [Parameter(Mandatory = $true, Position = 3)] [string] $BearerToken,
        [Parameter(Mandatory = $true, Position = 4)] [string] $ADLSFilePath,
        [Parameter(Mandatory = $true, Position = 5)] [string] $FlushPosition
    )

    # Rest documentation:
    # https://docs.microsoft.com/en-us/rest/api/storageservices/datalakestoragegen2/path/update

    $PathToFlush = $ADLSFilePath.trim("/") # remove all "//path" or "path/"
    $method = "PATCH"

    $headers = @{ }
    $headers.Add("x-ms-version", "2018-11-09")
    $headers.Add("Authorization", "Bearer $BearerToken")

    # PATCH http://{accountName}.{dnsSuffix}/{filesystem}/{path}?action={action}&position={position}
    $URI = "https://$StorageAccountName.dfs.core.windows.net/" + $FilesystemName + "/" + $PathToFlush + "?action=flush&position=$FlushPosition"

    try {
        $response = Invoke-WebRequest -method $method -Uri $URI -Headers $headers # returns empty response
        $response
    }
    catch {
        $ErrorMessage = $_.Exception.Message
        $StatusDescription = $_.Exception.Response.StatusDescription
        Throw $ErrorMessage + " " + $StatusDescription + " ADLSFilePath: $ADLSFilePath, FlushPosition: $FlushPosition"
    }
}

# the code
$StorageAccountName = 'upload0example'
$FilesystemName = 'my-filesystem'
$ADLSFilePath = 'folder1\folder2\file.txt'
$FileToUpload = 'C:\tmp\My_50MB_file_that_i_want_to_upload.txt'

$ClientID = '27e1347d-40b9-421e-9546-1a4727ae1fa4' # ApplicationID
$ClientSecret = '8h]D//c3FHp_FNF49]SygxL3_lm2O:G4'
$Scope = 'https://storage.azure.com/.default'
$GrantType = 'client_credentials'
$DirectoryID = 'your_directory_id' # a.k.a. TenantID

$BearerToken = Get-OAuth2Token -ClientID $ClientID -ClientSecret $ClientSecret -Scope $Scope -GrantType $GrantType -DirectoryID $DirectoryID

New-FileInADLS -StorageAccountName $StorageAccountName -FilesystemName $FilesystemName -BearerToken $BearerToken -ADLSFilePath $ADLSFilePath

$file_details = Import-FileToADLS -StorageAccountName $StorageAccountName -FilesystemName $FilesystemName -BearerToken $BearerToken -ADLSFilePath $ADLSFilePath -FileToUpload $FileToUpload

Clear-AdlsFileCache -StorageAccountName $StorageAccountName -FilesystemName $FilesystemName -BearerToken $BearerToken -ADLSFilePath $ADLSFilePath -FlushPosition $file_details.filesize
```

Hi Michal,

Thanks for the wonderful article.

Could you please advise how to create/upload file using shared key authentication method.

I am getting 403 forbidden while trying to create file using Shared Key authentication method.

Hi RazaWilliams.

Could you paste your code, please?

You can use this portal to make it easy to read: https://paste.ofcode.org/

I did not try to upload the data using an access key, but of course I can check if everything is correct according to my previous article: http://sql.pawlikowski.pro/2019/03/10/connecting-to-azure-data-lake-storage-gen2-from-powershell-using-rest-api-a-step-by-step-guide/

Hi Michał Pawlikowski ,

ADLSGen2 Storage Filesystem :

I have been trying to set ACL permission on root directory inside the filesystem/container and finding it little hard to fix it. I’m not sure where am missing to include the root directory as well to apply the permission instead to traverse only through sub dir/folder.

Not sure if im missing something in URI 🙁

The script gave by MS apply the ACL permission on all folders inside the root directory, however need to apply permission on root directory as well.

For some security reason i couldn’t share the script here.

Please advise or help.

Hi Raj.

I’m sorry but I do not know what you are referring to. Could you please use a code pasting portal, like this one: https://paste.ofcode.org/ to present your case?

Also I do not know what exactly you are using from Microsoft. Since the release of the multi-protocol feature, there is a lot of stuff that now actually works (after a year!) as it has worked for blobs since the beginning of Azure storage 🙂 My post actually refers to the native ADLS Gen2 REST API, and now you can do more with old tools that use the blob REST API only.

Thanks for your response Michał .I have given the pseudo code. Please acknowledge if you were able to look at it. At line 17 i’m providing the root directory, however the ACL permission applies on the folders that are inside the root directory. I need them to applied on the root directory as well.

However if i give $null, ACL permission applies on all the root directory which is not my requirement.

Also how to apply ACL permission on a specific folder ? Please advise

After pasting it to https://paste.ofcode.org/ you need to copy the url of the page and paste it here, like this one: https://paste.ofcode.org/r249zXX7Y7HEA8n3qLGqB3

Here you go : https://paste.ofcode.org/38vF6iYvn8nSKnufEEKVTA

Hmm, OK, so you are using PowerShell for the logic and curl for triggering the API.

You know, I’ve created a similar example in my previous post:

http://sql.pawlikowski.pro/2019/03/10/connecting-to-azure-data-lake-storage-gen2-from-powershell-using-rest-api-a-step-by-step-guide/#Set_permissions_on_filesystem_or_path

It uses access keys, so it looks kinda nasty. Anyway, you can see that for the root of the filesystem (which is the same as setting permissions ON the filesystem) I only add an extra “/” to the path by setting $Path = "/", and the URL looks like this:

$URI = "https://$StorageAccountName.dfs.core.windows.net/" + $FilesystemName + "/" + $Path + "?action=setAccessControl"

This definitely works in one of my projects and I have not had any problems with it. So try setting RootDir to “/” if you want to start with the filesystem, and skip it if your root is something nested relative to the root. BTW, I found this in the past by reverse engineering the requests sent by Azure Storage Explorer: they send a double “/” for permissions on the filesystem (also known as a container in the blob world 😉 )
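To make the difference between the root and a nested path concrete, here is a minimal sketch that only builds the URI, using the same concatenation as above. The function name `Get-AclUri` and the sample account and filesystem names are hypothetical and not part of the original script.

```powershell
# Hypothetical helper: builds the setAccessControl URI the same way as the line above.
function Get-AclUri {
    param(
        [Parameter(Mandatory = $true)] [string] $StorageAccountName,
        [Parameter(Mandatory = $true)] [string] $FilesystemName,
        [string] $Path = "/"   # "/" targets the filesystem root itself
    )
    # filesystem + "/" + path, so a root path of "/" yields a double "/" in the URI
    "https://$StorageAccountName.dfs.core.windows.net/" + $FilesystemName + "/" + $Path + "?action=setAccessControl"
}

# Sample values are placeholders.
$rootUri   = Get-AclUri -StorageAccountName "mystorage" -FilesystemName "myfs" -Path "/"
$nestedUri = Get-AclUri -StorageAccountName "mystorage" -FilesystemName "myfs" -Path "folder1/folder2"
$rootUri
$nestedUri
```

The first URI ends with `myfs//?action=setAccessControl`, which matches the double “/” described above; the second one targets only `folder1/folder2`.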

Looks like this only works with file sizes up to 100 MB. Do you have a script that handles more than that? Otherwise it’s fine, I will go ahead and modify it to support larger files.

Hi Ganesh,

Unfortunately, I do not have such a script.

In that case you need to implement an incremental load, the same as you would for a parallel upload scenario, which uses “position” and “action=flush”. See the docs: https://docs.microsoft.com/en-us/rest/api/storageservices/datalakestoragegen2/path/update#uri-parameters

Are you getting “413 Request Entity Too Large”, RequestBodyTooLarge, “The request body is too large and exceeds the maximum permissible limit.”? In that case I should edit my article and mention that there is a 100 MB limit on the body of an API request…
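As a rough illustration of the incremental approach, the sketch below only plans the calls: one “append” per chunk at increasing positions, then a single “flush” at the total length. It sends nothing over the network. The function name `Get-UploadPlan` and the 4 MB chunk size are assumptions chosen for the example.

```powershell
# Hypothetical helper: plans the append/flush calls for a file of a given size.
function Get-UploadPlan {
    param(
        [Parameter(Mandatory = $true)] [long] $FileSize,
        [long] $ChunkSize = 4MB   # assumed chunk size, kept well below the body limit
    )
    $plan = @()
    $position = [long]0
    while ($position -lt $FileSize) {
        # The last chunk may be shorter than the others.
        $length = [Math]::Min($ChunkSize, $FileSize - $position)
        $plan += [pscustomobject]@{ Action = "append"; Position = $position; Length = $length }
        $position += $length
    }
    # One final flush at the total length commits everything appended so far.
    $plan += [pscustomobject]@{ Action = "flush"; Position = $position; Length = [long]0 }
    $plan
}

$plan = Get-UploadPlan -FileSize 10MB -ChunkSize 4MB
$plan | ForEach-Object { "action=$($_.Action)&position=$($_.Position)" }
```

Each planned entry maps to one PATCH request against the file path, with the query string shown in the output.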

Thanks.

Yes sir.. I am getting that error message when going beyond 100MB

Hi Michał!

I ran into the problem when I tried to overwrite a file in the 2nd generation data lake.

I export data to a zip archive daily.

The name of the * .zip file is always the same – “MyData.zip”, but the file size is different.

These are my next steps:

1. PUT https:// … \MyData.zip?resource=file

response 409 (The specified path already exists). I think it’s Ok.

2. PATCH https:// … \MyData.zip?action=append&position=0

response 202 (Accepted). I think it’s OK too: I’m specifying that the position should be set to 0.

3. PATCH https:// … \MyData.zip?action=flush&position=366

where 366 is the actual number of bytes in my new file.

Response 400 (The uploaded data is not contiguous or the position query parameter..)

And in the response header I see “Content-Length: 280”.

Could you please advise?

Hi Alex.

From the REST API docs you can tell that the path update (executed with the PATCH method) can only be done in append mode, which is in fact not meant for overwriting a file.

A zip file is a binary object, and it will be different every time you generate it, starting from the first byte.

So it means you cannot leverage append mode here. The file needs to be deleted first, before you upload its updated version.
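The delete-then-upload sequence can be sketched as a list of requests. This is only an illustration of the order of calls and of the position values; nothing is sent. The function name `Get-OverwriteRequests` and the sample URI are hypothetical.

```powershell
# Hypothetical helper: lists the calls for replacing an existing file, in order.
function Get-OverwriteRequests {
    param(
        [Parameter(Mandatory = $true)] [string] $FileUri,   # full dfs URI of the file
        [Parameter(Mandatory = $true)] [long] $NewLength    # size of the new content in bytes
    )
    @(
        [pscustomobject]@{ Method = "DELETE"; Uri = $FileUri }                                        # remove the old file
        [pscustomobject]@{ Method = "PUT";    Uri = $FileUri + "?resource=file" }                     # create an empty file
        [pscustomobject]@{ Method = "PATCH";  Uri = $FileUri + "?action=append&position=0" }          # send the new bytes
        [pscustomobject]@{ Method = "PATCH";  Uri = $FileUri + "?action=flush&position=$NewLength" }  # commit them
    )
}

# Placeholder URI; 366 is the new file size from the question above.
$requests = Get-OverwriteRequests -FileUri "https://mystorage.dfs.core.windows.net/myfs/export/MyData.zip" -NewLength 366
$requests | ForEach-Object { "$($_.Method) $($_.Uri)" }
```

Because the file is empty again after the create call, the flush position equals the size of the new content.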

Hi Michał!

Thanks for answering me!

So it turns out that there are no methods to organize cyclic rewriting?

I read the docs, but maybe I am missing something..

Well, as far as I know, not from the ADLS REST API. You need to delete the file and upload it again in separate calls.

I can see, that BLOB API handles this differently.

If you check the docs:

https://docs.microsoft.com/en-us/rest/api/storageservices/put-blob

you will find this:

“The Put Blob operation creates a new block, page, or append blob, or updates the content of an existing block blob. (…) the content of the existing blob is overwritten with the content of the new blob”

Since the BLOB REST API is now supported in ADLS (and it was not supported at the time of writing this article), you can try to invoke BLOB commands against the data lake. But this requires you to call a different endpoint, probably with a different set of parameters.
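For orientation only, here is an untested sketch of what a Put Blob request against the blob endpoint might look like when prepared for splatting. The function name `New-PutBlobRequest` and all sample values are hypothetical, and I have not verified this against a data lake account; the `x-ms-blob-type` header is the one the Put Blob docs require.

```powershell
# Hypothetical helper: prepares the parameters of a Put Blob call (nothing is sent here).
function New-PutBlobRequest {
    param(
        [Parameter(Mandatory = $true)] [string] $StorageAccountName,
        [Parameter(Mandatory = $true)] [string] $ContainerName,
        [Parameter(Mandatory = $true)] [string] $BlobPath,
        [Parameter(Mandatory = $true)] [string] $BearerToken
    )
    @{
        Method  = "PUT"
        # Note the blob endpoint instead of the dfs endpoint.
        Uri     = "https://$StorageAccountName.blob.core.windows.net/" + $ContainerName + "/" + $BlobPath.Trim("/")
        Headers = @{
            "x-ms-version"   = "2018-11-09"
            "x-ms-blob-type" = "BlockBlob"
            "Authorization"  = "Bearer $BearerToken"
        }
    }
}

# Placeholder values.
$request = New-PutBlobRequest -StorageAccountName "mystorage" -ContainerName "myfs" -BlobPath "/export/MyData.zip" -BearerToken "<token>"
$request.Uri
# Assumed usage, not executed here:
# Invoke-WebRequest @request -InFile "C:\temp\MyData.zip"
```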

Thanks a lot!

Michal,

I am trying to troubleshoot a (404) Not Found. The specified filesystem does not exist. error. I am also new to adls so I am sure that this is something simple. What are some tips I can use to make sure I have the script and my storage account set up correctly?

Hi Jim.

Hmm, interesting. I would say: “try to print out all variables with the path and check if everything is in place.”

But in the meantime: “check also google if this error is not a part of a bigger conspiracy” 😉 And you know what? Looks like it is!

First, official documentation:

https://docs.microsoft.com/en-us/azure/databricks/data/data-sources/azure/adls-gen2/azure-datalake-gen2-faqs-issues#when-i-accessed-an-azure-data-lake-storage-gen2-account-with-the-hierarchical-namespace-enabled-i-experienced-a-javaiofilenotfoundexception-error-and-the-error-message-includes-filesystemnotfound

Then Cloudera’s:

https://docs.cloudera.com/HDPDocuments/Cloudbreak/Cloudbreak-2.9.0/cloud-data-access/content/cb_configuring-access-to-adls2-test2.html

From my point of view, it doesn’t matter whether this error comes from Java or any other language (like, for us, PowerShell and REST). It means that you can’t create blob containers using the Portal on HNS-enabled storage accounts (HNS means hierarchical namespace, which also means Azure Data Lake 😉 ; it’s just a Storage Account with Data Lake capabilities enabled). Which is, BTW, strange, but this could be your case…? I did not try this, as I always create filesystems (which can now be called containers because of the storage blob API interoperability…) using scripts or Azure Storage Explorer.

OK, so first try to create a filesystem instead of a container. You can do this using the storage explorer viewer (in the portal) or Azure Storage Explorer. Then check whether the same script works for you; if it does, well, then the reason is as described in the article… But deleting a storage container seems a little bit… scary. I’m not sure this is a good option; it depends on your case and on whether you can do it (e.g. you work in a test environment or something).

Then please let me know how it worked for you 🙂 Regards!

Hi Michal,

Firstly, great article! I am able to use above example and test. Works nicely!

I have another question along similar lines: how can I use a client certificate to authenticate instead of adding a client secret? Would you have an example for me? I have tried myself, but I’m getting nowhere.

Could you maybe help here?

Thank you so much!

Ashwin

Hi Michal,

Have you or anyone else faced error 407? Below is the error. Any suggestions, please?

PS P:\> $file_details = Import-FileToADLS -StorageAccountName $StorageAccountName -FilesystemName $FilesystemName -BearerToken $BearerToken -ADLSFilePath $ADLSFilePath -FileToUpload $FileToUpload

The remote server returned an error: (407) Proxy Authentication Required. authenticationrequired ADLSFilePath: dir1/rmad_file.txt, FileToUpload: P:\Project documents\Azure Work\StorageAccountUploadTest\StorageUploadTest.txt
At line:120 char:9
+ Throw $ErrorMessage + " " + $StatusDescription + " ADLSFilePa …
+ ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~
+ CategoryInfo : OperationStopped: (The remote serv…eUploadTest.txt:String) [], RuntimeException
+ FullyQualifiedErrorId : The remote server returned an error: (407) Proxy Authentication Required. authenticationrequired ADLSFilePath: dir1/rmad_file.txt, FileToUpload: P:\Project documents\Azure Work\StorageAccountUploadTest\StorageUploadTest.txt

Hi Guarav.

Looks like an ordinary issue with proxy. Do you have proxy configured on your machine?

If so, please turn it off. Or, if your company requires you to use some kind of proxy, you need to be properly signed in there. This error is not related to Azure itself, but to your connectivity.

First hit on Google:

https://kb.netwrix.com/1219
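If the proxy cannot be turned off, one option that may help is passing the proxy settings to Invoke-WebRequest explicitly through its -Proxy and -ProxyUseDefaultCredentials parameters. Below is a minimal sketch that builds these parameters for splatting; the function name `Get-ProxyParameters` and the proxy address are hypothetical, and whether default credentials are enough depends on your company’s setup.

```powershell
# Hypothetical helper: returns extra Invoke-WebRequest parameters for a corporate proxy.
function Get-ProxyParameters {
    param(
        [string] $ProxyUri   # e.g. the proxy address given by your network team
    )
    # No proxy given: return an empty set so the call stays unchanged.
    if ([string]::IsNullOrWhiteSpace($ProxyUri)) { return @{} }
    @{
        Proxy                      = $ProxyUri
        ProxyUseDefaultCredentials = $true   # authenticate to the proxy as the signed-in user
    }
}

# Placeholder proxy address.
$proxyParams = Get-ProxyParameters -ProxyUri "http://proxy.example.local:8080"
$proxyParams.Proxy
# Assumed usage inside the upload function, not executed here:
# Invoke-WebRequest -Method $method -Uri $URI -Headers $headers -Body $body @proxyParams
```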

Thanks for sharing.

In case it is useful to anybody, I modified the Import function to support large files, here is my implementation:

function Import-FileToADLS {
    [CmdletBinding()]
    Param(
        [Parameter(Mandatory = $true, Position = 1)] [string] $StorageAccountName,
        [Parameter(Mandatory = $true, Position = 2)] [string] $FilesystemName,
        [Parameter(Mandatory = $true, Position = 3)] [string] $BearerToken,
        [Parameter(Mandatory = $true, Position = 4)] [string] $ADLSFilePath,
        [Parameter(Mandatory = $true, Position = 5)] [string] $FileToUpload
    )
    # REST documentation:
    # https://docs.microsoft.com/en-us/rest/api/storageservices/datalakestoragegen2/path/update
    # Note: this snippet only sends the "append" calls. Appended data is committed
    # only by a follow-up "action=flush" call, which is not shown here.
    $result = @{ }
    $filesize = (Get-Item $FileToUpload).Length
    if ($filesize -gt 0) {
        $PathToUpload = $ADLSFilePath.Trim("/") # strip leading and trailing "/"
        $method = "PATCH"
        $headers = @{ }
        $headers.Add("x-ms-version", "2018-11-09")
        $headers.Add("Authorization", "Bearer $BearerToken")
        $offset = 0
        $chunkSize = 256kb
        $chunk = New-Object byte[] $chunkSize
        $fileStream = $null
        try {
            $fileStream = [System.IO.File]::OpenRead($FileToUpload)
            # Read() returns 0 at the end of the file, which ends the loop.
            while ($bytesRead = $fileStream.Read($chunk, 0, $chunkSize)) {
                $URI = "https://$StorageAccountName.dfs.core.windows.net/" + $FilesystemName + "/" + $PathToUpload + "?action=append&position=$offset"
                if ($bytesRead -eq $chunkSize) {
                    $body = $chunk
                }
                else {
                    # Last, shorter chunk: send only the bytes that were actually read.
                    $body = New-Object byte[] $bytesRead
                    [System.Array]::Copy($chunk, $body, $bytesRead)
                }
                try {
                    $response = Invoke-WebRequest -Method $method -Uri $URI -Headers $headers -Body $body
                }
                catch {
                    $ErrorMessage = $_.Exception.Message
                    $StatusDescription = $_.Exception.Response.StatusDescription
                    Throw $ErrorMessage + " " + $StatusDescription + " ADLSFilePath: $PathToUpload, FileToUpload: $FileToUpload"
                }
                $offset += $bytesRead
            }
        }
        finally {
            # The stream is null if OpenRead failed, so close it only when it exists.
            if ($fileStream) { $fileStream.Close() }
        }
    }
    $result.Add("filesize", $filesize)
    $result
}

Please find a modified version of the script which adds support for large files.

https://gist.github.com/JCallico/81ee415b7fa25e88e359d8fc852a6a92