This article describes how to connect using the access key. I also prepared another article showing how to connect to ADLS Gen2 using an OAuth bearer token and upload a file. It is definitely easier (no canonical headers and encoding), although it requires an application account. You can read more here: http://sql.pawlikowski.pro/2019/07/02/uploading-file-to-azure-data-lake-storage-gen2-from-powershell-using-oauth-2-0-bearer-token-and-acls/


Introduction

Azure Data Lake Storage Generation 2 was introduced in the middle of 2018. With new features like hierarchical namespaces and Azure Blob Storage integration, this was something better, faster, cheaper (blah, blah, blah!) compared to its first version – Gen1.

Since then, there has been enough time to prepare the appropriate libraries, thanks to which we could connect to our data lake.

Is that right? Well, not really…

Ok, it’s August 2019 and something finally has changed 🙂

Ok, it’s February 2020 and finally, MPA is in GA!

Microsoft introduced a public preview of Multi Protocol Access (MPA) which enables BLOB API on hierarchical namespaces.

It now works in all regions (at first it worked only in West US 2 and West Central US) and, of course, it has some limitations.

You can read about the details below:

https://docs.microsoft.com/en-us/azure/storage/blobs/data-lake-storage-multi-protocol-access

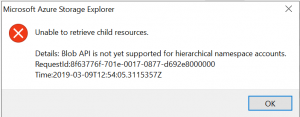

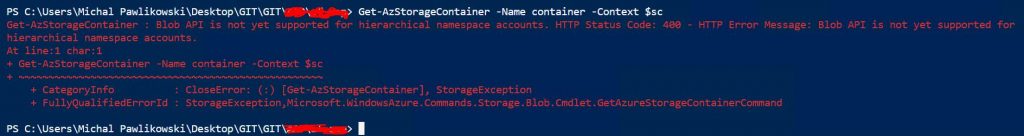

ADLS Gen2 has been generally available since 7th of February 2019. Thirty-two days later, there is still no support for the BLOB API, and it means no support for az storage cli or REST API. So you are going to have problems here:

“Blob API is not yet supported for hierarchical namespace accounts”

Are you joking?

And good luck with searching for a proper module in PS Gallery. Of course, this will change in the future.

As a matter of fact – I hope, that this article will help someone to write it 🙂 (yeah, I’m too lazy or too busy or too stupid to do it myself 😛 )

So for now, there is only one way to connect to Azure Data Lake Storage Gen2… Using native REST API calls. And it’s a hell of a job to understand the specification and make it work in the code. But it’s still not rocket science 😛

And by the way. I’m just a pure mssql server boy, I need no sympathy 😛 So let me put it in that way: web development, APIs, RESTs and all of that crap are not in my field of interest. But sometimes you have to be your own hero to save the world… I needed this functionality in the project, I read all the documentation and a few blog posts. However, no source has presented how to do it for ADLS Gen2 😮

So now I’ll try to fill this gap and explain this as far as I understood it, but not from the perspective of a professional front-end/back-end developer, which I am definitely not!

ADLS Gen2 REST calls in action – sniffing with Azure Storage Explorer

I wrote an entire article about How to sniff ADLS Gen2 storage REST API calls to Azure using Azure Storage Explorer.

Go there, read it, try it for yourself. If you need to implement it in your code just look at how they are doing it.

Understanding Data Lake REST calls

If you want to talk to the ADLS Gen2 endpoint in its language, you have to learn “two dialects” 😛

- REST specification dedicated only for Azure Data Lake Storage Gen2 (URL with proper endpoints and parameters), documented HERE.

- GENERAL specification for authenticating connections to any Azure service endpoint using “Shared key”, documented HERE.

Knowing the first one gives you the ability to invoke proper commands with proper parameters on ADLS. Just like in console, mkdir or ls.

Knowing the second tells you how to sign those commands. Bear in mind that no bearer token (see the details in a green box at the beginning of this page…), password or key is sent during the communication! Basically, you prepare your request as a bunch of strictly specified headers with their proper parameters (concatenated and separated with a new line sign) and then you “simply” SIGN this one huge string with your storage account access key (the Shared Key) taken from the Azure portal (or a script). Strictly speaking this is a keyed hash, not encryption, because nobody can decrypt the result back into the request.

Then you just send your request over a plain http(s) connection with one additional header called “Authorization”, which carries this signature, along with all your other headers!

You may ask, whyyyyy, why we have to implement such a logic?

The answer is easy. Just because they say so 😀

But to be honest, this makes total sense.

The ADLS endpoint is going to receive your request. Then it will also SIGN it using the same secret Shared Key (which, once again, wasn’t transferred anywhere in the communication, it’s a secret!). After signing, it will compare the result to your “Authorization” header. If they are the same, it means two things:

- you are the master of disaster, owner of the secret key that nobody else could use to sign the message for ADLS

- nobody in the middle changed anything in the signed parts of your request, and that’s the main reason why this is so tortuous…

How to sign is a different kettle of fish… You have to compute a keyed-Hash Message Authentication Code (HMAC) with the SHA256 algorithm, keyed with the byte array decoded from your ADLS access key (which, by the way, is itself a Base64 string :D), and then convert the result to a Base64 value. But no worries! It’s not as complicated as it sounds, and we have .NET classes available in PowerShell that can do that for us.
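To make the "sign on both sides and compare" idea a bit more tangible, here is a minimal sketch. The function name `Get-HmacSha256Signature`, the demo key and the shortened request strings are my own assumptions for illustration only; the real string to sign is much longer and is built step by step later in this article.

```powershell
# Hypothetical helper (not part of the original scripts): computes HMAC-SHA256
# of a string with a Base64-encoded key and returns the signature as Base64.
function Get-HmacSha256Signature {
    param(
        [Parameter(Mandatory = $true)] [string] $Message,
        [Parameter(Mandatory = $true)] [string] $Base64Key
    )
    $hasher = New-Object System.Security.Cryptography.HMACSHA256
    try {
        $hasher.Key = [System.Convert]::FromBase64String($Base64Key)
        $bytes = [System.Text.Encoding]::UTF8.GetBytes($Message)
        return [System.Convert]::ToBase64String($hasher.ComputeHash($bytes))
    }
    finally {
        $hasher.Dispose()
    }
}

# Demo key only (the text "Jefe" in Base64). Never hard-code a real access key.
$demoKey = "SmVmZQ=="

# Client side: sign a (heavily shortened) description of the request.
$request = "GET`n/mystorage/myfilesystem"
$clientSignature = Get-HmacSha256Signature -Message $request -Base64Key $demoKey

# Service side: recompute the signature from what was received and compare.
$serverSignature = Get-HmacSha256Signature -Message $request -Base64Key $demoKey
$untouched = ($clientSignature -ceq $serverSignature)

# If the request changes on the way, the signatures no longer match.
$tamperedRequest = "GET`n/mystorage/otherfilesystem"
$tamperedSignature = Get-HmacSha256Signature -Message $tamperedRequest -Base64Key $demoKey
$detected = ($clientSignature -cne $tamperedSignature)
```

Here `$untouched` and `$detected` should both end up as `$true`: the same key and the same text always give the same signature, and any change in the text gives a different one.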

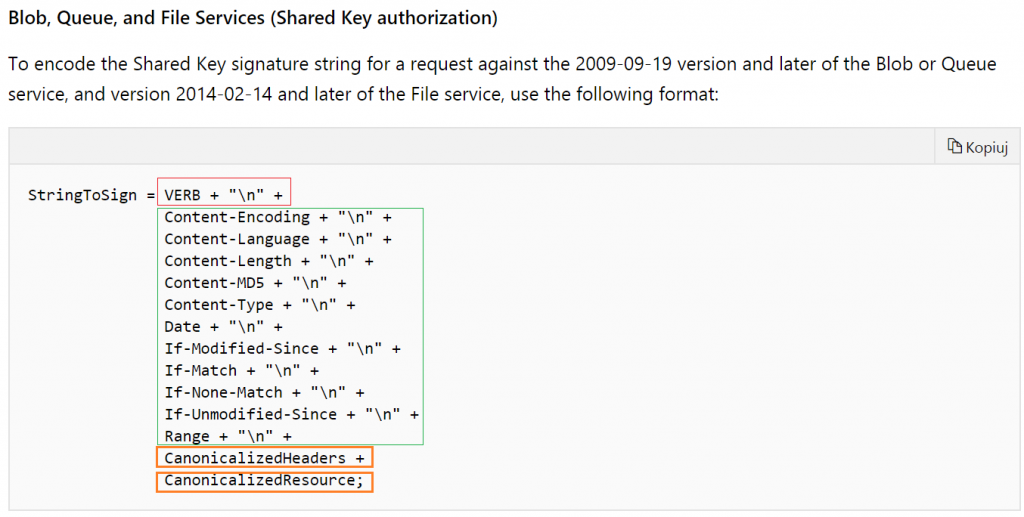

Authorization Header specification

Ok, let’s take a closer look at the Azure auth REST specification here: https://docs.microsoft.com/en-us/rest/api/storageservices/authorize-with-shared-key

We are implementing an ADLS Gen2 file system request, which belongs to the “Blob, Queue, and File Services” section. If you are going to do this for the Table Service, or if you would like to implement it in the “Lite” version, please look for the proper paragraph in the docs.

Preparing Signature String

Quick screen from docs:

We can divide it into 4 important sections.

- VERB – the kind of HTTP operation that we are going to invoke in REST (e.g. GET will be used to list files, PUT for creating directories or uploading content to files, PATCH for changing permissions)

- Fixed Position Header Values – they must occur in every signature string, always in the same order, BUT you can leave them as an empty string (just “” with an obligatory end of line sign)

- CanonicalizedHeaders – what should be there is also specified in the documentation. Basically, you have to put all “x-ms-” headers that you send here. And this is important – in lexicographical order by header name! For example: "x-ms-date:$date`nx-ms-version:2018-11-09`n"

- CanonicalizedResource – also specified in the docs. It starts with "/", the storage account name and the path of the resource, followed by all query parameters that you pass to the ADLS endpoint according to its own specification, written as name:value with the parameter name in lowercase. The docs require them to be sorted lexicographically by parameter name, so the easiest way is to keep the same order in the invoked URI. So if you want to list your files recursively in the root directory, invoking the endpoint at https://[StorageAccountName].dfs.core.windows.net/[FilesystemName]?recursive=true&resource=filesystem you need to pass them just like this: "/[StorageAccountName]/[FilesystemName]`nrecursive:true`nresource:filesystem"

Bear in mind that every value HAS TO end with a new line sign, except for the last one, which appears in the canonicalized resource section. Header and parameter names also need to be provided in lowercase!
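If you don't want to assemble these two sections by hand every time, the rules above can be sketched as two small helpers. Both function names (`Get-CanonicalizedHeaders`, `Get-CanonicalizedResource`) are hypothetical and not part of any Azure module. The sketch follows the sorting rule from the Shared Key documentation (lexicographical by name) and assumes that every query parameter appears only once.

```powershell
# Hypothetical helper: builds the CanonicalizedHeaders section from a hashtable.
# Only "x-ms-" headers are used; names are lowercased and sorted ordinally.
function Get-CanonicalizedHeaders {
    param([Parameter(Mandatory = $true)] [hashtable] $Headers)

    $map = @{}
    foreach ($key in $Headers.Keys) {
        $name = ([string]$key).ToLowerInvariant()
        if ($name.StartsWith("x-ms-")) { $map[$name] = ([string]$Headers[$key]).Trim() }
    }
    $names = [string[]]@($map.Keys)
    [System.Array]::Sort($names, [System.StringComparer]::Ordinal)

    $result = ""
    foreach ($name in $names) { $result += "${name}:$($map[$name])`n" }
    return $result
}

# Hypothetical helper: builds the CanonicalizedResource section from the URI.
# Assumption: each query parameter occurs once (no multi-value handling).
function Get-CanonicalizedResource {
    param(
        [Parameter(Mandatory = $true)] [string] $StorageAccountName,
        [Parameter(Mandatory = $true)] [uri] $Uri
    )
    $resource = "/$StorageAccountName" + $Uri.AbsolutePath

    $map = @{}
    foreach ($pair in $Uri.Query.TrimStart("?").Split("&")) {
        if ($pair -eq "") { continue }
        $parts = @($pair -split "=", 2)
        $name = [System.Uri]::UnescapeDataString($parts[0]).ToLowerInvariant()
        $value = ""
        if ($parts.Count -gt 1) { $value = [System.Uri]::UnescapeDataString($parts[1]) }
        $map[$name] = $value
    }
    $names = [string[]]@($map.Keys)
    [System.Array]::Sort($names, [System.StringComparer]::Ordinal)

    # Every parameter goes on its own line; there is no new line after the last one.
    foreach ($name in $names) { $resource += "`n${name}:$($map[$name])" }
    return $resource
}

# Usage with made-up values; note the query parameters are deliberately out of order.
$demoHeaders = @{
    "x-ms-version"  = "2018-11-09"
    "x-ms-date"     = "Sun, 10 Mar 2019 11:50:10 GMT"
    "Authorization" = "not part of the canonicalized headers"
}
$canonicalHeaders = Get-CanonicalizedHeaders -Headers $demoHeaders
$canonicalResource = Get-CanonicalizedResource -StorageAccountName "mystorage" `
    -Uri "https://mystorage.dfs.core.windows.net/myfs?resource=filesystem&recursive=true"
```

The ordinal sort is used on purpose: the default culture-aware sorting in PowerShell can treat hyphens differently from a plain byte-by-byte comparison.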

In examples above, I’m using `n which in PowerShell is just a replacement for \n

So if I want to list files in the root directory first I declare parameters:

```powershell
[CmdletBinding()]
Param(
    [Parameter(Mandatory=$true,Position=1)] [string] $StorageAccountName,
    [Parameter(Mandatory=$true,Position=2)] [string] $FilesystemName,
    [Parameter(Mandatory=$true,Position=3)] [string] $AccessKey
)
```

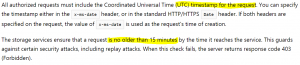

Then I need to prepare a date, according to the specification. And watch out! Read the “Specifying the Date Header” section in the docs really carefully! It’s really important to understand how Date headers work! The most important part is this: if you send the x-ms-date header, you leave the Date field in the signature string empty and put the timestamp into the CanonicalizedHeaders section instead.

Ok, creating it in PowerShell. I’m also preparing the new line and my method as variables, ready to be used later:

```powershell
$date = [System.DateTime]::UtcNow.ToString("R") # ex: Sun, 10 Mar 2019 11:50:10 GMT
$n = "`n"
$method = "GET"
```

So what about fixed headers? For listing files in ADLS, and with x-ms-date defined, I can leave them all empty. It would be different if you wanted to implement PUT and upload content to Azure. Then you should use at least Content-Length and Content-Type. For now it looks like this:

```powershell
$stringToSign = "$method$n" # VERB
$stringToSign += "$n" # Content-Encoding + "\n" +
$stringToSign += "$n" # Content-Language + "\n" +
$stringToSign += "$n" # Content-Length + "\n" +
$stringToSign += "$n" # Content-MD5 + "\n" +
$stringToSign += "$n" # Content-Type + "\n" +
$stringToSign += "$n" # Date + "\n" +
$stringToSign += "$n" # If-Modified-Since + "\n" +
$stringToSign += "$n" # If-Match + "\n" +
$stringToSign += "$n" # If-None-Match + "\n" +
$stringToSign += "$n" # If-Unmodified-Since + "\n" +
$stringToSign += "$n" # Range + "\n" +

# SECTION: CanonicalizedHeaders + "\n"
$stringToSign += "x-ms-date:$date" + $n +
                 "x-ms-version:2018-11-09" + $n

# SECTION: CanonicalizedResource (no "\n" after the last value)
$stringToSign += "/$StorageAccountName/$FilesystemName" + $n +
                 "recursive:true" + $n +
                 "resource:filesystem"
```

- Implementing file upload is much more complex than just listing files. According to the docs, you first have to CREATE a file and then UPLOAD content to it (and it looks like you can also do this in parallel 😀 Happy threading/forking!)

- Don’t forget about the required headers. UPDATE is going to need more of them than listing.

- You can also change permissions here, see setAccessControl

- There is also a huuuge topic about conditional headers. Don’t miss it!
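Since the fixed headers always come in the same order, the whole signature string can also be put together by one small function. This is only a sketch; `New-StringToSign` and its parameters are names I made up, and the Content-Length handling follows the rule from the Shared Key docs that a zero length is signed as an empty string in current API versions.

```powershell
# Hypothetical helper: assembles the string to sign. Fixed headers that are not
# supplied are written as empty lines, in the order required by the Shared Key docs.
function New-StringToSign {
    param(
        [Parameter(Mandatory = $true)] [string] $Method,
        [Parameter(Mandatory = $true)] [string] $CanonicalizedHeaders,
        [Parameter(Mandatory = $true)] [string] $CanonicalizedResource,
        [hashtable] $FixedHeaders = @{}
    )
    $fixedOrder = @(
        "Content-Encoding", "Content-Language", "Content-Length", "Content-MD5",
        "Content-Type", "Date", "If-Modified-Since", "If-Match",
        "If-None-Match", "If-Unmodified-Since", "Range"
    )

    $stringToSign = $Method.ToUpperInvariant() + "`n"
    foreach ($name in $fixedOrder) {
        $value = ""
        if ($FixedHeaders.ContainsKey($name)) { $value = [string]$FixedHeaders[$name] }
        # A content length of zero must be signed as an empty string.
        if ($name -eq "Content-Length" -and $value -eq "0") { $value = "" }
        $stringToSign += $value + "`n"
    }
    return $stringToSign + $CanonicalizedHeaders + $CanonicalizedResource
}

# Usage with made-up values: creating a directory that must not exist yet.
$demoCanonicalHeaders = "x-ms-date:Sun, 10 Mar 2019 11:50:10 GMT`nx-ms-version:2018-11-09`n"
$demoCanonicalResource = "/mystorage/myfs/FOLDER1`nresource:directory"
$demoStringToSign = New-StringToSign -Method "PUT" `
    -FixedHeaders @{ "If-None-Match" = "*"; "Content-Length" = 0 } `
    -CanonicalizedHeaders $demoCanonicalHeaders `
    -CanonicalizedResource $demoCanonicalResource
```

Whatever you put into `-FixedHeaders` must of course also be sent as a real HTTP header with exactly the same value, otherwise the service computes a different signature.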

Encrypting Signature String

Let’s have fun 🙂 Part of the credits goes to another blog with an example of a connection to storage tables.

First, we have to prepare a byte array decoded from our Base64 access key:

```powershell
$sharedKey = [System.Convert]::FromBase64String($AccessKey)
```

Then we create an HMAC SHA256 object and fill it with the bytes from our access key.

```powershell
$hasher = New-Object System.Security.Cryptography.HMACSHA256
$hasher.Key = $sharedKey
```

Now we can actually sign our string using the ComputeHash function of our HMAC SHA256 object. But before signing, we have to convert our string into a byte array.

As the specification requires, we have to encode the result once again into Base64.

```powershell
$signedSignature = [System.Convert]::ToBase64String($hasher.ComputeHash([System.Text.Encoding]::UTF8.GetBytes($stringToSign)))
```

And use it as the auth header, with the storage account name before it.

Here we also have to add the ordinary headers with the date of sending the request and the API version.

Remember to use the proper version (a date), which you can find in the ADLS Gen2 REST API spec:

```powershell
$authHeader = "SharedKey ${StorageAccountName}:$signedSignature"

$headers = @{"x-ms-date"=$date}
$headers.Add("x-ms-version","2018-11-09")
$headers.Add("Authorization",$authHeader)
```

That’s it!

Now you can invoke a request to your endpoint:

```powershell
$URI = "https://$StorageAccountName.dfs.core.windows.net/" + $FilesystemName + "?recursive=true&resource=filesystem"

$result = Invoke-RestMethod -method GET -Uri $URI -Headers $headers
```

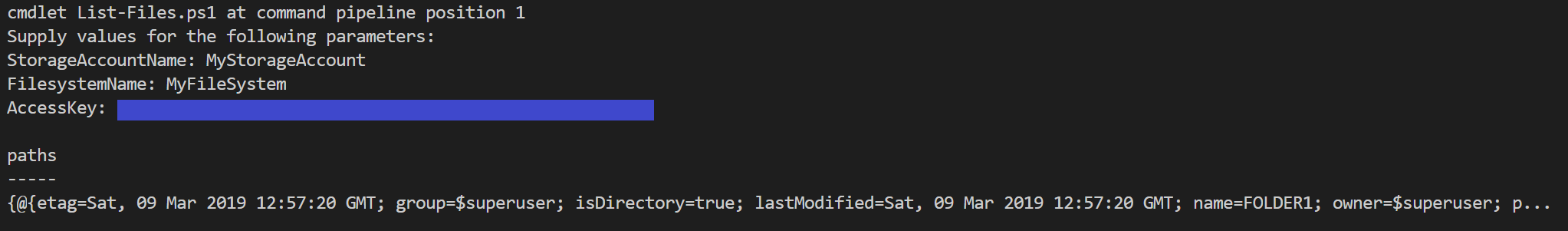

Result:

It works!

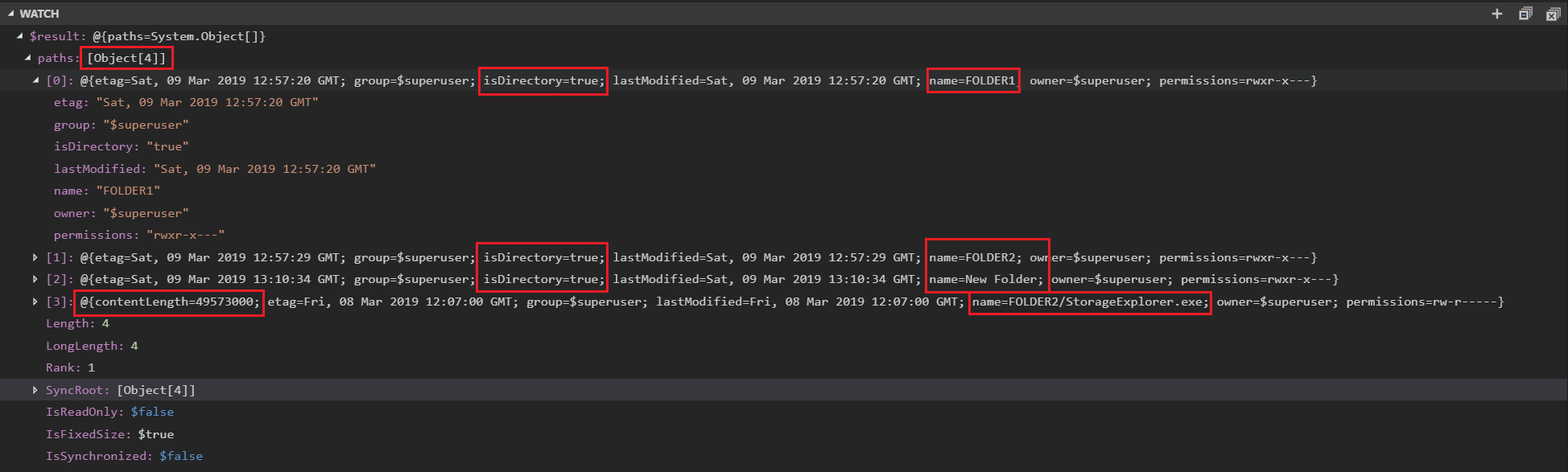

Now a small debug session in Visual Studio Code, adding a watch for the $result variable to see a little more than in the console output:

From here you can see that recursive=true did the trick, and now I have a list of 4 objects. Three folders in the root directory and one file in FOLDER2, sized 49MB. Just remember that the API returns at most 5000 objects in a single response.
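When a listing does not fit into one response, the service hands back a continuation token in the x-ms-continuation response header, and you repeat the call with a continuation query parameter until no token comes back. The loop itself can be sketched independently of the HTTP part. Everything below is an assumption for illustration: `Get-AllPaths` is a hypothetical name, and the fetch script block is a fake that returns canned pages instead of calling Azure.

```powershell
# Hypothetical helper: keeps asking for pages until no continuation token is returned.
# $FetchPage receives the token ($null for the first page) and must return an object
# with two properties: Paths (items of this page) and Continuation (next token or $null).
function Get-AllPaths {
    param([Parameter(Mandatory = $true)] [scriptblock] $FetchPage)

    $all = New-Object System.Collections.Generic.List[object]
    $token = $null
    do {
        $page = & $FetchPage $token
        foreach ($item in $page.Paths) { $all.Add($item) }
        $token = $page.Continuation
    } while (-not [string]::IsNullOrEmpty($token))

    return $all.ToArray()
}

# Fake pages standing in for two responses of the service (made-up data).
$fakePages = @{
    ""        = [pscustomobject]@{ Paths = @("FOLDER1", "FOLDER2"); Continuation = "token-1" }
    "token-1" = [pscustomobject]@{ Paths = @("FOLDER2/file.csv"); Continuation = $null }
}
$fakeFetch = { param($token) $fakePages[[string]$token] }

$allPaths = @(Get-AllPaths -FetchPage $fakeFetch)
```

In a real script the fetch block would sign and send the request (adding the continuation parameter to both the URI and the canonicalized resource) and read the token from the response headers, which means using Invoke-WebRequest instead of Invoke-RestMethod, or a way to capture response headers.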

Easy peasy, right? 😉 Head down to the example scripts and try it for yourself!

Example Scripts

List files

This example should list the content of your root folder in Azure Data Lake Storage Gen2, along with all subdirectories and all existing files recursively.

```powershell
[CmdletBinding()]
Param(
    [Parameter(Mandatory=$true,Position=1)] [string] $StorageAccountName,
    [Parameter(Mandatory=$true,Position=2)] [string] $FilesystemName,
    [Parameter(Mandatory=$true,Position=3)] [string] $AccessKey
)

# Rest documentation:
# https://docs.microsoft.com/en-us/rest/api/storageservices/datalakestoragegen2/path/list

$date = [System.DateTime]::UtcNow.ToString("R")

$n = "`n"
$method = "GET"

# $stringToSign = "GET`n`n`n`n`n`n`n`n`n`n`n`n"
$stringToSign = "$method$n" # VERB
$stringToSign += "$n" # Content-Encoding + "\n" +
$stringToSign += "$n" # Content-Language + "\n" +
$stringToSign += "$n" # Content-Length + "\n" +
$stringToSign += "$n" # Content-MD5 + "\n" +
$stringToSign += "$n" # Content-Type + "\n" +
$stringToSign += "$n" # Date + "\n" +
$stringToSign += "$n" # If-Modified-Since + "\n" +
$stringToSign += "$n" # If-Match + "\n" +
$stringToSign += "$n" # If-None-Match + "\n" +
$stringToSign += "$n" # If-Unmodified-Since + "\n" +
$stringToSign += "$n" # Range + "\n" +

# SECTION: CanonicalizedHeaders + "\n"
$stringToSign += "x-ms-date:$date" + $n +
                 "x-ms-version:2018-11-09" + $n

# SECTION: CanonicalizedResource
$stringToSign += "/$StorageAccountName/$FilesystemName" + $n +
                 "recursive:true" + $n +
                 "resource:filesystem"

$sharedKey = [System.Convert]::FromBase64String($AccessKey)
$hasher = New-Object System.Security.Cryptography.HMACSHA256
$hasher.Key = $sharedKey

$signedSignature = [System.Convert]::ToBase64String($hasher.ComputeHash([System.Text.Encoding]::UTF8.GetBytes($stringToSign)))

$authHeader = "SharedKey ${StorageAccountName}:$signedSignature"

$headers = @{"x-ms-date"=$date}
$headers.Add("x-ms-version","2018-11-09")
$headers.Add("Authorization",$authHeader)

$URI = "https://$StorageAccountName.dfs.core.windows.net/" + $FilesystemName + "?recursive=true&resource=filesystem"

$result = Invoke-RestMethod -method $method -Uri $URI -Headers $headers

$result
```

List files in directory, limit results, return recursive

This example should list the content of your requested folder in Azure Data Lake Storage Gen2. You can limit the number of returned results (up to 5000) and decide if files in folders should be returned recursively.

```powershell
[CmdletBinding()]
Param(
    [Parameter(Mandatory=$true,Position=1)] [string] $StorageAccountName,
    [Parameter(Mandatory=$true,Position=2)] [string] $FilesystemName,
    [Parameter(Mandatory=$true,Position=3)] [string] $AccessKey,
    [Parameter(Mandatory=$true,Position=4)] [string] $Directory, # ex. "/", "/dir1/dir2"
    [Parameter(Mandatory=$false,Position=5)] [string] $MaxResults = "5000", # up to "5000"
    [Parameter(Mandatory=$false,Position=6)] [string] $Recursive = "false" # "true","false"
)

# Rest documentation:
# https://docs.microsoft.com/en-us/rest/api/storageservices/datalakestoragegen2/path/list

$date = [System.DateTime]::UtcNow.ToString("R")

$n = "`n"
$method = "GET"

# $stringToSign = "GET`n`n`n`n`n`n`n`n`n`n`n`n"
$stringToSign = "$method$n" # VERB
$stringToSign += "$n" # Content-Encoding + "\n" +
$stringToSign += "$n" # Content-Language + "\n" +
$stringToSign += "$n" # Content-Length + "\n" +
$stringToSign += "$n" # Content-MD5 + "\n" +
$stringToSign += "$n" # Content-Type + "\n" +
$stringToSign += "$n" # Date + "\n" +
$stringToSign += "$n" # If-Modified-Since + "\n" +
$stringToSign += "$n" # If-Match + "\n" +
$stringToSign += "$n" # If-None-Match + "\n" +
$stringToSign += "$n" # If-Unmodified-Since + "\n" +
$stringToSign += "$n" # Range + "\n" +

# SECTION: CanonicalizedHeaders + "\n"
$stringToSign += "x-ms-date:$date" + $n +
                 "x-ms-version:2018-11-09" + $n

# SECTION: CanonicalizedResource
$stringToSign += "/$StorageAccountName/$FilesystemName" + $n +
                 "directory:" + $Directory + $n +
                 "maxresults:" + $MaxResults + $n +
                 "recursive:$Recursive" + $n +
                 "resource:filesystem"

$sharedKey = [System.Convert]::FromBase64String($AccessKey)
$hasher = New-Object System.Security.Cryptography.HMACSHA256
$hasher.Key = $sharedKey

$signedSignature = [System.Convert]::ToBase64String($hasher.ComputeHash([System.Text.Encoding]::UTF8.GetBytes($stringToSign)))

$authHeader = "SharedKey ${StorageAccountName}:$signedSignature"

$headers = @{"x-ms-date"=$date}
$headers.Add("x-ms-version","2018-11-09")
$headers.Add("Authorization",$authHeader)

$URI = "https://$StorageAccountName.dfs.core.windows.net/" + $FilesystemName + "?directory=$Directory&maxresults=$MaxResults&recursive=$Recursive&resource=filesystem"

$result = Invoke-RestMethod -method $method -Uri $URI -Headers $headers

$result
```

Create directory (or path of directories)

This example should create folders declared in PathToCreate variable.

Bear in mind, that creating a path here, means creating everything declared as a path. So there is no need to create FOLDER1 as first, then FOLDER1/SUBFOLDER1 as second. You can make them all at once just providing the full path "/FOLDER1/SUBFOLDER1/"

```powershell
[CmdletBinding()]
Param(
    [Parameter(Mandatory=$true,Position=1)] [string] $StorageAccountName,
    [Parameter(Mandatory=$true,Position=2)] [string] $FilesystemName,
    [Parameter(Mandatory=$true,Position=3)] [string] $AccessKey,
    [Parameter(Mandatory=$true,Position=4)] [string] $PathToCreate
)

# Rest documentation:
# https://docs.microsoft.com/en-us/rest/api/storageservices/datalakestoragegen2/path/create

$PathToCreate = "/" + $PathToCreate.trim("/") # remove all "//path" or "path/"

$date = [System.DateTime]::UtcNow.ToString("R") # ex: Sun, 10 Mar 2019 11:50:10 GMT

$n = "`n"
$method = "PUT"

$stringToSign = "$method$n" # VERB
$stringToSign += "$n" # Content-Encoding + "\n" +
$stringToSign += "$n" # Content-Language + "\n" +
$stringToSign += "$n" # Content-Length + "\n" +
$stringToSign += "$n" # Content-MD5 + "\n" +
$stringToSign += "$n" # Content-Type + "\n" +
$stringToSign += "$n" # Date + "\n" +
$stringToSign += "$n" # If-Modified-Since + "\n" +
$stringToSign += "$n" # If-Match + "\n" +
$stringToSign += "*" + "$n" # If-None-Match + "\n" +
$stringToSign += "$n" # If-Unmodified-Since + "\n" +
$stringToSign += "$n" # Range + "\n" +

# SECTION: CanonicalizedHeaders + "\n"
$stringToSign += "x-ms-date:$date" + $n +
                 "x-ms-version:2018-11-09" + $n

# SECTION: CanonicalizedResource
$stringToSign += "/$StorageAccountName/$FilesystemName" + $PathToCreate + $n +
                 "resource:directory"

$sharedKey = [System.Convert]::FromBase64String($AccessKey)
$hasher = New-Object System.Security.Cryptography.HMACSHA256
$hasher.Key = $sharedKey

$signedSignature = [System.Convert]::ToBase64String($hasher.ComputeHash([System.Text.Encoding]::UTF8.GetBytes($stringToSign)))

$authHeader = "SharedKey ${StorageAccountName}:$signedSignature"

$headers = @{"x-ms-date"=$date}
$headers.Add("x-ms-version","2018-11-09")
$headers.Add("Authorization",$authHeader)
$headers.Add("If-None-Match","*") # To fail if the destination already exists, use a conditional request with If-None-Match: "*"

$URI = "https://$StorageAccountName.dfs.core.windows.net/" + $FilesystemName + $PathToCreate + "?resource=directory"

try {
    Invoke-RestMethod -method $method -Uri $URI -Headers $headers # returns empty response
    $true
}
catch {
    $ErrorMessage = $_.Exception.Message
    $StatusDescription = $_.Exception.Response.StatusDescription
    $false

    Throw $ErrorMessage + " " + $StatusDescription
}
```

Rename file/folder (or move to the other path)

This example should rename the given path to another one. You can also move files with that command (with hierarchical namespaces, the path to a file is only metadata).

Example parameters:

```powershell
$StorageAccountName = "mystorage"
$FilesystemName = "my-filesystem"
$AccessKey = "blahblah"
$PathToRename = 'Catalog1/Catalog2'
$RenameTo = 'Catalog1/Catalog2_renamed'
```

And the most requested code 😀

```powershell
[CmdletBinding()]
Param(
    [Parameter(Mandatory = $true, Position = 1)] [string] $StorageAccountName,
    [Parameter(Mandatory = $true, Position = 2)] [string] $FilesystemName,
    [Parameter(Mandatory = $true, Position = 3)] [string] $AccessKey,
    [Parameter(Mandatory = $true, Position = 4)] [string] $PathToRename,
    [Parameter(Mandatory = $true, Position = 5)] [string] $RenameTo
)

# Rest documentation - rename file or folder:
# https://docs.microsoft.com/en-us/rest/api/storageservices/datalakestoragegen2/path/create

# Makes [System.Web.HttpUtility] available (it is not loaded by default in Windows PowerShell)
Add-Type -AssemblyName System.Web

$PathToRename = "/" + $PathToRename.trim("/") # remove all "//path" or "path/"
$RenameTo = "/" + $RenameTo.trim("/")

$RenameTo_encoded = [System.Web.HTTPUtility]::UrlEncode($RenameTo)

# The rename source has to include the filesystem name
$PathToRename = "/$FilesystemName" + $PathToRename
$PathToRename_encoded = [System.Web.HTTPUtility]::UrlEncode($PathToRename)

$date = [System.DateTime]::UtcNow.ToString("R") # ex: Sun, 10 Mar 2019 11:50:10 GMT

$n = "`n"
$method = "PUT"

$stringToSign = "$method$n" # VERB
$stringToSign += "$n" # Content-Encoding + "\n" +
$stringToSign += "$n" # Content-Language + "\n" +
$stringToSign += "$n" # Content-Length + "\n" +
$stringToSign += "$n" # Content-MD5 + "\n" +
$stringToSign += "$n" # Content-Type + "\n" +
$stringToSign += "$n" # Date + "\n" +
$stringToSign += "$n" # If-Modified-Since + "\n" +
$stringToSign += "$n" # If-Match + "\n" +
$stringToSign += "$n" # If-None-Match + "\n" +
$stringToSign += "$n" # If-Unmodified-Since + "\n" +
$stringToSign += "$n" # Range + "\n" +

# SECTION: CanonicalizedHeaders + "\n"
$stringToSign += "x-ms-date:$date" + $n +
                 "x-ms-rename-source:$PathToRename_encoded" + $n +
                 "x-ms-version:2018-11-09" + $n

# SECTION: CanonicalizedResource
$stringToSign += "/$StorageAccountName/$FilesystemName" + $RenameTo_encoded

$sharedKey = [System.Convert]::FromBase64String($AccessKey)
$hasher = New-Object System.Security.Cryptography.HMACSHA256
$hasher.Key = $sharedKey

$signedSignature = [System.Convert]::ToBase64String($hasher.ComputeHash([System.Text.Encoding]::UTF8.GetBytes($stringToSign)))

$authHeader = "SharedKey ${StorageAccountName}:$signedSignature"

$headers = @{"x-ms-date" = $date }
$headers.Add("x-ms-version", "2018-11-09")
$headers.Add("Authorization", $authHeader)
$headers.Add("x-ms-rename-source", $PathToRename_encoded)

$URI = "https://$StorageAccountName.dfs.core.windows.net/" + $FilesystemName + $RenameTo_encoded

try {
    Invoke-RestMethod -method $method -Uri $URI -Headers $headers # returns empty response
    $true
}
catch {
    $ErrorMessage = $_.Exception.Message
    $StatusDescription = $_.Exception.Response.StatusDescription
    $false

    Throw $ErrorMessage + " " + $StatusDescription
}
```

Delete folder or file (simple, without continuation)

This example should delete a file or a folder, also giving you an option to delete a folder recursively.

Bear in mind that this example uses a simple delete without continuation. To learn what it is and how to handle it, please refer to the section describing the “continuation” parameter in the Path Delete documentation (linked in the script comments).

```powershell
[CmdletBinding()]
Param(
    [Parameter(Mandatory=$true,Position=1)] [string] $StorageAccountName,
    [Parameter(Mandatory=$true,Position=2)] [string] $FilesystemName,
    [Parameter(Mandatory=$true,Position=3)] [string] $AccessKey,
    [Parameter(Mandatory=$true,Position=4)] [string] $PathToDelete,
    [Parameter(Mandatory=$false,Position=5)] [switch] $Recursive = $False
)

# Rest documentation:
# https://docs.microsoft.com/en-us/rest/api/storageservices/datalakestoragegen2/path/delete

$PathToDelete = "/" + $PathToDelete.trim("/") # remove all "//path" or "path/"

$date = [System.DateTime]::UtcNow.ToString("R") # ex: Sun, 10 Mar 2019 11:50:10 GMT

# The REST API expects the literal text "true" or "false"
if ($Recursive) {$Recursive_rest = 'true'} else {$Recursive_rest = 'false'}

$n = "`n"
$method = "DELETE"

$stringToSign = "$method$n" # VERB
$stringToSign += "$n" # Content-Encoding + "\n" +
$stringToSign += "$n" # Content-Language + "\n" +
$stringToSign += "$n" # Content-Length + "\n" +
$stringToSign += "$n" # Content-MD5 + "\n" +
$stringToSign += "$n" # Content-Type + "\n" +
$stringToSign += "$n" # Date + "\n" +
$stringToSign += "$n" # If-Modified-Since + "\n" +
$stringToSign += "$n" # If-Match + "\n" +
$stringToSign += "$n" # If-None-Match + "\n" +
$stringToSign += "$n" # If-Unmodified-Since + "\n" +
$stringToSign += "$n" # Range + "\n" +

# SECTION: CanonicalizedHeaders + "\n"
$stringToSign += "x-ms-date:$date" + $n +
                 "x-ms-version:2018-11-09" + $n

# SECTION: CanonicalizedResource
$stringToSign += "/$StorageAccountName/$FilesystemName" + $PathToDelete + $n +
                 "recursive:$Recursive_rest"

$sharedKey = [System.Convert]::FromBase64String($AccessKey)
$hasher = New-Object System.Security.Cryptography.HMACSHA256
$hasher.Key = $sharedKey

$signedSignature = [System.Convert]::ToBase64String($hasher.ComputeHash([System.Text.Encoding]::UTF8.GetBytes($stringToSign)))

$authHeader = "SharedKey ${StorageAccountName}:$signedSignature"

$headers = @{"x-ms-date"=$date}
$headers.Add("x-ms-version","2018-11-09")
$headers.Add("Authorization",$authHeader)

$URI = "https://$StorageAccountName.dfs.core.windows.net/" + $FilesystemName + $PathToDelete + "?recursive=$Recursive_rest"

try {
    Invoke-RestMethod -method $method -Uri $URI -Headers $headers # returns empty response
    $true
}
catch {
    $ErrorMessage = $_.Exception.Message
    $StatusDescription = $_.Exception.Response.StatusDescription
    $false

    Throw $ErrorMessage + " " + $StatusDescription
}
```

Usage example:

```powershell
./your_script.ps1 -StorageAccountName "storage_Acc_name" -FilesystemName "fsname" -AccessKey "key" -PathToDelete "folder1/folder2" -Recursive
```

List filesystems

This example lists all available filesystems in your storage account. Refer to $result.filesystems to get the array of filesystem objects.

```powershell
[CmdletBinding()]
Param(
    [Parameter(Mandatory=$true,Position=1)] [string] $StorageAccountName,
    [Parameter(Mandatory=$true,Position=2)] [string] $AccessKey
)

# Rest documentation:
# https://docs.microsoft.com/en-us/rest/api/storageservices/datalakestoragegen2/filesystem/list

$date = [System.DateTime]::UtcNow.ToString("R") # ex: Sun, 10 Mar 2019 11:50:10 GMT

$n = "`n"
$method = "GET"

$stringToSign = "$method$n" # VERB
$stringToSign += "$n" # Content-Encoding + "\n" +
$stringToSign += "$n" # Content-Language + "\n" +
$stringToSign += "$n" # Content-Length + "\n" +
$stringToSign += "$n" # Content-MD5 + "\n" +
$stringToSign += "$n" # Content-Type + "\n" +
$stringToSign += "$n" # Date + "\n" +
$stringToSign += "$n" # If-Modified-Since + "\n" +
$stringToSign += "$n" # If-Match + "\n" +
$stringToSign += "$n" # If-None-Match + "\n" +
$stringToSign += "$n" # If-Unmodified-Since + "\n" +
$stringToSign += "$n" # Range + "\n" +

# SECTION: CanonicalizedHeaders + "\n"
$stringToSign += "x-ms-date:$date" + $n +
                 "x-ms-version:2018-11-09" + $n

# SECTION: CanonicalizedResource
$stringToSign += "/$StorageAccountName/" + $n +
                 "resource:account"

$sharedKey = [System.Convert]::FromBase64String($AccessKey)
$hasher = New-Object System.Security.Cryptography.HMACSHA256
$hasher.Key = $sharedKey

$signedSignature = [System.Convert]::ToBase64String($hasher.ComputeHash([System.Text.Encoding]::UTF8.GetBytes($stringToSign)))

$authHeader = "SharedKey ${StorageAccountName}:$signedSignature"

$headers = @{"x-ms-date"=$date}
$headers.Add("x-ms-version","2018-11-09")
$headers.Add("Authorization",$authHeader)

$URI = "https://$StorageAccountName.dfs.core.windows.net/" + "?resource=account"

$result = Invoke-RestMethod -method $method -Uri $URI -Headers $headers

$result
```

Create filesystem

This example should create the filesystem provided in the FilesystemName parameter. Remember that filesystem names cannot contain capital letters or special characters!
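You can catch a bad name before the request is even sent. The sketch below assumes that a filesystem follows the same naming rules as a blob container: 3 to 63 characters, only lowercase letters, digits and hyphens, starting and ending with a letter or digit, and no two hyphens in a row. `Test-FilesystemName` is a hypothetical helper name.

```powershell
# Hypothetical helper: returns $true when the name looks like a valid filesystem name.
# Assumption: the rules are the same as for blob container names.
function Test-FilesystemName {
    param(
        [Parameter(Mandatory = $true)] [AllowEmptyString()] [string] $Name
    )
    # (?=.{3,63}\z)          total length between 3 and 63 characters
    # [a-z0-9]+(-[a-z0-9]+)*  groups of lowercase letters or digits separated by single hyphens
    return ($Name -cmatch '^(?=.{3,63}\z)[a-z0-9]+(-[a-z0-9]+)*\z')
}

# Usage with made-up names
$goodName = Test-FilesystemName -Name "my-filesystem"
$badName = Test-FilesystemName -Name "MyFilesystem"
```

The -cmatch operator is used instead of -match because the default PowerShell comparison ignores case, which would let "MyFilesystem" slip through.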

```powershell
[CmdletBinding()]
Param(
    [Parameter(Mandatory=$true,Position=1)] [string] $StorageAccountName,
    [Parameter(Mandatory=$true,Position=2)] [string] $FilesystemName,
    [Parameter(Mandatory=$true,Position=3)] [string] $AccessKey
)

# Rest documentation:
# https://docs.microsoft.com/en-us/rest/api/storageservices/datalakestoragegen2/filesystem/create

$date = [System.DateTime]::UtcNow.ToString("R") # ex: Sun, 10 Mar 2019 11:50:10 GMT

$n = "`n"
$method = "PUT"

$stringToSign = "$method$n" # VERB
$stringToSign += "$n" # Content-Encoding + "\n" +
$stringToSign += "$n" # Content-Language + "\n" +
$stringToSign += "$n" # Content-Length + "\n" +
$stringToSign += "$n" # Content-MD5 + "\n" +
$stringToSign += "$n" # Content-Type + "\n" +
$stringToSign += "$n" # Date + "\n" +
$stringToSign += "$n" # If-Modified-Since + "\n" +
$stringToSign += "$n" # If-Match + "\n" +
$stringToSign += "$n" # If-None-Match + "\n" +
$stringToSign += "$n" # If-Unmodified-Since + "\n" +
$stringToSign += "$n" # Range + "\n" +

# SECTION: CanonicalizedHeaders + "\n"
$stringToSign += "x-ms-date:$date" + $n +
                 "x-ms-version:2018-11-09" + $n

# SECTION: CanonicalizedResource
$stringToSign += "/$StorageAccountName/$FilesystemName" + $n +
                 "resource:filesystem"

$sharedKey = [System.Convert]::FromBase64String($AccessKey)
$hasher = New-Object System.Security.Cryptography.HMACSHA256
$hasher.Key = $sharedKey

$signedSignature = [System.Convert]::ToBase64String($hasher.ComputeHash([System.Text.Encoding]::UTF8.GetBytes($stringToSign)))

$authHeader = "SharedKey ${StorageAccountName}:$signedSignature"

$headers = @{"x-ms-date"=$date}
$headers.Add("x-ms-version","2018-11-09")
$headers.Add("Authorization",$authHeader)

$URI = "https://$StorageAccountName.dfs.core.windows.net/" + $FilesystemName + "?resource=filesystem"

try {
    Invoke-RestMethod -method $method -Uri $URI -Headers $headers # returns empty response
}
catch {
    $ErrorMessage = $_.Exception.Message
    $StatusDescription = $_.Exception.Response.StatusDescription
    $false

    Throw $ErrorMessage + " " + $StatusDescription
}
```

Get permissions from filesystem or path

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 |

[CmdletBinding()]
Param(
    [Parameter(Mandatory=$true,Position=1)] [string] $StorageAccountName,
    [Parameter(Mandatory=$True,Position=2)] [string] $AccessKey,
    [Parameter(Mandatory=$True,Position=3)] [string] $FilesystemName,
    [Parameter(Mandatory=$True,Position=4)] [string] $Path
)

# Rest documentation:
# https://docs.microsoft.com/en-us/rest/api/storageservices/datalakestoragegen2/path/getproperties

$date = [System.DateTime]::UtcNow.ToString("R") # ex: Sun, 10 Mar 2019 11:50:10 GMT

$n = "`n"
$method = "HEAD"

$stringToSign = "$method$n" #VERB
$stringToSign += "$n" # Content-Encoding + "\n" +
$stringToSign += "$n" # Content-Language + "\n" +
$stringToSign += "$n" # Content-Length + "\n" +
$stringToSign += "$n" # Content-MD5 + "\n" +
$stringToSign += "$n" # Content-Type + "\n" +
$stringToSign += "$n" # Date + "\n" +
$stringToSign += "$n" # If-Modified-Since + "\n" +
$stringToSign += "$n" # If-Match + "\n" +
$stringToSign += "$n" # If-None-Match + "\n" +
$stringToSign += "$n" # If-Unmodified-Since + "\n" +
$stringToSign += "$n" # Range + "\n" +

# SECTION: CanonicalizedHeaders + "\n"
$stringToSign += "x-ms-date:$date" + $n +
                 "x-ms-version:2018-11-09" + $n

# SECTION: CanonicalizedResource + "\n"
$stringToSign += "/$StorageAccountName/$FilesystemName/$Path" + $n +
                 "action:getAccessControl" + $n +
                 "upn:true"

$sharedKey = [System.Convert]::FromBase64String($AccessKey)
$hasher = New-Object System.Security.Cryptography.HMACSHA256
$hasher.Key = $sharedKey

$signedSignature = [System.Convert]::ToBase64String($hasher.ComputeHash([System.Text.Encoding]::UTF8.GetBytes($stringToSign)))

$authHeader = "SharedKey ${StorageAccountName}:$signedSignature"

$headers = @{"x-ms-date"=$date}
$headers.Add("x-ms-version","2018-11-09")
$headers.Add("Authorization",$authHeader)

$URI = "https://$StorageAccountName.dfs.core.windows.net/" + $FilesystemName + "/" + $Path + "?action=getAccessControl&upn=true"

$result = Invoke-WebRequest -method $method -Uri $URI -Headers $headers

$result.Headers.'x-ms-acl'
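The `x-ms-acl` header comes back as one comma-separated string (something like `user::rwx,group::r-x,other::---`), which is awkward to filter or export. Below is a minimal sketch of a parser that splits it into objects. The function name `ConvertFrom-AclString` and the output property names are my own and hypothetical, and the sketch assumes every entry follows the `[default:]type:id:permissions` layout.

```powershell
function ConvertFrom-AclString {
    param(
        [Parameter(Mandatory = $true)]
        [AllowEmptyString()]
        [string] $Acl
    )

    foreach ($entry in $Acl.Split(',')) {
        $entry = $entry.Trim()
        if (-not $entry) { continue }   # skip empty fragments

        $parts = $entry.Split(':')
        $scope = 'access'

        # Default ACL entries carry an extra "default:" prefix
        if ($parts[0] -eq 'default') {
            $scope = 'default'
            $parts = $parts[1..($parts.Length - 1)]
        }

        [pscustomobject]@{
            Scope       = $scope      # 'access' or 'default'
            Type        = $parts[0]   # user, group, mask or other
            Id          = $parts[1]   # empty for the owning user/group
            Permissions = $parts[2]   # e.g. rwx
        }
    }
}
```

With the script above this could be used as `ConvertFrom-AclString $result.Headers.'x-ms-acl' | Where-Object Type -eq 'user'`.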

Set permissions on filesystem or path

[CmdletBinding()]
Param(
    [Parameter(Mandatory=$true,Position=1)] [string] $StorageAccountName,
    [Parameter(Mandatory=$True,Position=2)] [string] $AccessKey,
    [Parameter(Mandatory=$True,Position=3)] [string] $FilesystemName,
    [Parameter(Mandatory=$True,Position=4)] [string] $Path,
    [Parameter(Mandatory=$True,Position=5)] [string] $PermissionString
)

# Rest documentation:
# https://docs.microsoft.com/en-us/rest/api/storageservices/datalakestoragegen2/path/update

$date = [System.DateTime]::UtcNow.ToString("R") # ex: Sun, 10 Mar 2019 11:50:10 GMT

$n = "`n"
$method = "PATCH"

$stringToSign = "$method$n" #VERB
$stringToSign += "$n" # Content-Encoding + "\n" +
$stringToSign += "$n" # Content-Language + "\n" +
$stringToSign += "$n" # Content-Length + "\n" +
$stringToSign += "$n" # Content-MD5 + "\n" +
$stringToSign += "$n" # Content-Type + "\n" +
$stringToSign += "$n" # Date + "\n" +
$stringToSign += "$n" # If-Modified-Since + "\n" +
$stringToSign += "$n" # If-Match + "\n" +
$stringToSign += "$n" # If-None-Match + "\n" +
$stringToSign += "$n" # If-Unmodified-Since + "\n" +
$stringToSign += "$n" # Range + "\n" +

# SECTION: CanonicalizedHeaders + "\n"
$stringToSign += "x-ms-acl:$PermissionString" + $n +
                 "x-ms-date:$date" + $n +
                 "x-ms-version:2018-11-09" + $n

# SECTION: CanonicalizedResource + "\n"
$stringToSign += "/$StorageAccountName/$FilesystemName/$Path" + $n +
                 "action:setAccessControl"

$sharedKey = [System.Convert]::FromBase64String($AccessKey)
$hasher = New-Object System.Security.Cryptography.HMACSHA256
$hasher.Key = $sharedKey

$signedSignature = [System.Convert]::ToBase64String($hasher.ComputeHash([System.Text.Encoding]::UTF8.GetBytes($stringToSign)))

$authHeader = "SharedKey ${StorageAccountName}:$signedSignature"

$headers = @{"x-ms-date"=$date}
$headers.Add("x-ms-version","2018-11-09")
$headers.Add("Authorization",$authHeader)
$headers.Add("x-ms-acl",$PermissionString)

$URI = "https://$StorageAccountName.dfs.core.windows.net/" + $FilesystemName + "/" + $Path + "?action=setAccessControl"

Try {
    Invoke-RestMethod -method $method -Uri $URI -Headers $headers # returns empty response
    $true
}
catch {
    $ErrorMessage = $_.Exception.Message
    $StatusDescription = $_.Exception.Response.StatusDescription
    $false

    Throw $ErrorMessage + " " + $StatusDescription
}

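All scripts in this article repeat the same signing steps: build the string to sign, hash it with HMAC-SHA256 using the decoded access key, and Base64-encode the result. Here is a minimal sketch of how that part could be pulled into one function. `New-SharedKeyAuthHeader` and its parameter names are hypothetical, and the sketch assumes the 11 standard header slots (Content-Encoding through Range) stay empty, so it would not fit requests that need, for example, a signed Content-Length.

```powershell
function New-SharedKeyAuthHeader {
    param(
        [Parameter(Mandatory = $true)] [string] $StorageAccountName,
        [Parameter(Mandatory = $true)] [string] $AccessKey,
        [Parameter(Mandatory = $true)] [string] $Method,
        [Parameter(Mandatory = $true)] [string] $CanonicalizedHeaders,
        [Parameter(Mandatory = $true)] [string] $CanonicalizedResource
    )

    # VERB + "\n", then 11 empty standard headers, each contributing one "\n"
    $stringToSign = $Method + "`n" + ("`n" * 11) + $CanonicalizedHeaders + $CanonicalizedResource

    $hasher = New-Object System.Security.Cryptography.HMACSHA256
    try {
        $hasher.Key = [System.Convert]::FromBase64String($AccessKey)
        $hash = $hasher.ComputeHash([System.Text.Encoding]::UTF8.GetBytes($stringToSign))
    }
    finally {
        $hasher.Dispose()
    }

    "SharedKey ${StorageAccountName}:" + [System.Convert]::ToBase64String($hash)
}

# Possible usage for the "set permissions" request (variables as in the script above):
# $authHeader = New-SharedKeyAuthHeader -StorageAccountName $StorageAccountName -AccessKey $AccessKey `
#     -Method 'PATCH' `
#     -CanonicalizedHeaders "x-ms-acl:$PermissionString`nx-ms-date:$date`nx-ms-version:2018-11-09`n" `
#     -CanonicalizedResource "/$StorageAccountName/$FilesystemName/$Path`naction:setAccessControl"
```

The caller still has to send exactly the same `x-ms-*` header values it signed, otherwise the service rejects the signature.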
Good luck!

this was brilliant. Thank you so much.

In case anyone is wondering how to not get an error if an item exists (took me some time to figure out): uncomment $stringToSign += “*” + “$n” # If-None-Match + “\n” + and remove it (or uncomment) from the addHeader part

Hi Michael,

This is really a very nice post. I still need to implement what you have listed in this post though. While searching online I came across this page https://docs.microsoft.com/en-us/azure/storage/blobs/data-lake-storage-directory-file-acl-powershell so it looks like Microsoft is releasing a new module to make things easier.

Hi Sirini.

Thanks. Yes, I’m aware of new things since MPA (Multi Protocol Access) is now in general availability.

To be honest, in my opinion they (Microsoft, but also other vendors, developers, companies) were waiting for MPA availability since the beginning of ADLS Gen2… They did not release anything and I can say that I understand that. Why should I develop a new branch of my code, just to align it with a new protocol specification (ADLS Gen2 REST API), when there is a confirmed feature from Microsoft that will bring 100% compatibility using the BLOB API?

So now, after almost a year, you can just use a BLOB API connector, for example in Power BI, and use it to connect to ADLS Gen2. Pretty cool, but a year too late...

Anyway, I need to update my article and I will do it soon.

Thanks.

Hi Michael,

Thank you for the post and it is what I needed. I used your LIST directories script and it worked perfectly fine from my desktop (Powershell ISE), then I copied and pasted the same code in Azure Automation Runbook and unfortunately it fails with following error:-

Invoke-RestMethod : {"error":{"code":"AuthorizationFailure","message":"This request is not authorized to perform this operation.\nRequestId:0c0b4062-901f-0032-2630-ef9d2f000000\nTime:2020-02-29T18:46:36.9060927Z"}}

I have used the same code from my desktop and it works absolutely fine. Following is the code I used ( I copied the AccessKey from the Keys on the storage account Key1):-

# Storage account name
$StorageAccountName = "XXLLLSSS"
$FilesystemName = "$logs"
$AccessKey = "XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXekG6f6J0iNstoZLJghXg=="
$Directory = "queue/2020/02/29"
$MaxResults = "5000"
$Recursive = "true"

# Rest documentation:
# https://docs.microsoft.com/en-us/rest/api/storageservices/datalakestoragegen2/path/list

$date = [System.DateTime]::UtcNow.ToString("R")

$n = "`n"
$method = "GET"

# $stringToSign = "GET`n`n`n`n`n`n`n`n`n`n`n`n"
$stringToSign = "$method$n" #VERB
$stringToSign += "$n" # Content-Encoding + "\n" +
$stringToSign += "$n" # Content-Language + "\n" +
$stringToSign += "$n" # Content-Length + "\n" +
$stringToSign += "$n" # Content-MD5 + "\n" +
$stringToSign += "$n" # Content-Type + "\n" +
$stringToSign += "$n" # Date + "\n" +
$stringToSign += "$n" # If-Modified-Since + "\n" +
$stringToSign += "$n" # If-Match + "\n" +
$stringToSign += "$n" # If-None-Match + "\n" +
$stringToSign += "$n" # If-Unmodified-Since + "\n" +
$stringToSign += "$n" # Range + "\n" +

$stringToSign +=
    "x-ms-date:$date" + $n +
    "x-ms-version:2018-11-09" + $n #

$stringToSign +=
    "/$StorageAccountName/$FilesystemName" + $n +
    "directory:" + $Directory + $n +
    "maxresults:" + $MaxResults + $n +
    "recursive:$Recursive" + $n +
    "resource:filesystem" #

$sharedKey = [System.Convert]::FromBase64String($AccessKey)
$hasher = New-Object System.Security.Cryptography.HMACSHA256
$hasher.Key = $sharedKey

$signedSignature = [System.Convert]::ToBase64String($hasher.ComputeHash([System.Text.Encoding]::UTF8.GetBytes($stringToSign)))

$authHeader = "SharedKey ${StorageAccountName}:$signedSignature"

$headers = @{"x-ms-date"=$date}
$headers.Add("x-ms-version","2018-11-09")
$headers.Add("Authorization",$authHeader)

$URI = "https://$StorageAccountName.dfs.core.windows.net/" + $FilesystemName + "?directory=$Directory&maxresults=$MaxResults&recursive=$Recursive&resource=filesystem"

$result = Invoke-RestMethod -method $method -Uri $URI -Headers $headers

$result

Sounds like a firewall issue. Are you sure that your ADLS does not have VNet and firewall filtering enabled? When this kind of filtering is enabled, your ADLS can return 'This request is not authorized'. It is a PaaS service, so the filtering does not stop you from reaching the API, but you will always get this error message until you allow your (or Automation's) public IP or allow trusted Microsoft services (not sure how Automation is handled here).

Perfect sir, I just resolved that issue and I saw your email. That was the case on Storage Account (Firewall/Network Settings were set in such a way to not allow all networks but selected networks). Thank you again for your prompt response.

Cool 🙂 , I’m glad it worked out. Good luck!

Hi Michal,

I am trying to rename a file using the ADLS Gen2 REST API and can't seem to figure out the mistake. I am trying it in Fiddler and it always throws a 400 error when I use

x-ms-rename-source :

in the header section. If I remove it and run the request, it creates the file successfully.

PUT https://{storageaccountname.dfs.core.windows.net}/filesystemname/filename?resource=file

Header –

Authorization: Bearer {AccessToken}

Host: {storageaccountname}.dfs.core.windows.net

Content-Length: 0

Content-type: application/json

Hmm, I didn’t use fiddler, does it work with powershell code?

There is already a working “rename” script in the article.

Adding or removing x-ms-rename-source in the header section is not the only thing you need to do. You also have to calculate a proper signature over the canonicalized headers.

If you decide to remove a header from the request, you need to remove it from the string to sign too and recalculate the Base64 signature.
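One way to keep the request headers and the signature in sync is to derive the canonicalized header block from the same hashtable that is sent with the request. This is a minimal sketch, assuming Shared Key authorization as in the article's scripts (with an OAuth bearer token there is no signature to compute). `Get-CanonicalizedHeaders` is a hypothetical helper name; the rules it applies (only `x-ms-*` headers, lowercase names, sorted by name, one `name:value` pair per line) are the Shared Key canonicalization rules as I understand them.

```powershell
function Get-CanonicalizedHeaders {
    param(
        [Parameter(Mandatory = $true)]
        [System.Collections.IDictionary] $Headers
    )

    # Ordinal comparison gives a plain byte-wise sort, independent of culture
    $sorted = New-Object 'System.Collections.Generic.SortedDictionary[string,string]' -ArgumentList ([System.StringComparer]::Ordinal)

    foreach ($key in $Headers.Keys) {
        $name = "$key".Trim().ToLowerInvariant()
        if ($name.StartsWith('x-ms-', [System.StringComparison]::Ordinal)) {
            $sorted[$name] = "$($Headers[$key])".Trim()
        }
    }

    # Every header line, including the last one, ends with "\n"
    $canonical = ''
    foreach ($pair in $sorted.GetEnumerator()) {
        $canonical += "$($pair.Key):$($pair.Value)`n"
    }
    $canonical
}
```

Adding or dropping `x-ms-rename-source` in the hashtable then changes both the request and the string to sign in one place.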

Hi Michal, thanks for posting the example scripts. They are very useful for understanding ADLS Gen2.

I am using one of your scripts – 3.8 Get permissions from filesystem or path.

I am able to run it successfully and get the permissions for a particular FilesystemName and path.

I want to get the permissions for every filesystem and for all the directories, folders and files inside the ADLS Gen2 storage account. Can you please suggest what modification is required in your example script? Please let me know if it is possible with the REST API.

For e.g.

project
    project1 - file1.txt
        project1.1 - file.txt
    project2 - file1.txt
        project1.1 - file.txt
    project3 - file1.txt
        project1.1 - file.txt

raw
    project1 - file1.txt
        project1.1 - file.txt
    project2 - file1.txt
        project1.1 - file.txt
    project3 - file1.txt
        project1.1 - file.txt

project and raw are filesystem names. project1 is a path, and inside it are files and folders. I need to get the ACLs of all of them.

Your help would be much appreciated.

Hi Adam.

Well, there is no one-step solution for this. You need to create an algorithm that collects all the information in the desired order:

1. Get all filesystems and iterate over them

https://docs.microsoft.com/pl-pl/rest/api/storageservices/datalakestoragegen2/filesystem/list

2. As a nested step: get the properties of each filesystem

https://docs.microsoft.com/pl-pl/rest/api/storageservices/datalakestoragegen2/filesystem/getproperties

3. Then iterate over the objects listed under the root path, possibly retrieved with “recursive” set to true, to get the list of all items… BUT it depends on your needs (the list can be huge in a large data lake). Just remember that a single response is limited to 5000 items, so you need to implement and use the “continuation” parameter

https://docs.microsoft.com/en-us/rest/api/storageservices/datalakestoragegen2/path/list

4. As a nested iterator, you need to traverse your path and, for every particular item (directory or file), get its properties using

https://docs.microsoft.com/en-us/rest/api/storageservices/datalakestoragegen2/path/getproperties

I know this sounds like a lot of requests to send and receive, but this is the atomic nature of a REST API 😐 In most scenarios you cannot query for a batch of objects; you need to send the requests sequentially OR run them in parallel if you're using PowerShell 7.0 (see the ForEach-Object -Parallel cmdlet).
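The continuation handling from step 3 can be sketched as a small loop. This is only an outline under assumptions: `Get-AllPaths` is a hypothetical name, and `$GetPage` stands for a script block you would write yourself that signs and sends one Path List request and returns an object with `Paths` (the items of that page) and `Continuation` (the value of the `x-ms-continuation` response header, empty on the last page).

```powershell
function Get-AllPaths {
    param(
        # Script block that takes a continuation token (or $null for the first page)
        # and returns an object with the properties Paths and Continuation.
        [Parameter(Mandatory = $true)]
        [scriptblock] $GetPage
    )

    $token = $null
    do {
        $page = & $GetPage $token

        # Stream every item of the current page to the pipeline
        foreach ($item in $page.Paths) { $item }

        # An empty token means the service has no more pages
        $token = $page.Continuation
    } while ($token)
}
```

In a real `$GetPage` the token would go into the URI as the `continuation` query parameter and also into the canonicalized resource of the signature. Each returned item can then be passed to the "get permissions" script from the article.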

Thanks for your response Michał ,

For the Get permissions from filesystem or path sample script, I tried to export the output in CSV format:

$result.Headers.'x-ms-acl' | export-csv \home\adam\permi.csv

Output result:

Length 204

Can you suggest what I need to change to output to CSV?

Hi Adam.

Export-Csv and ConvertTo-Csv do not write the value they receive; they write the properties of the object they receive.

When you output $result.Headers.'x-ms-acl' directly, it is shown as a string anywhere a string is expected. The CSV cmdlets, however, treat it as an object and serialize its properties, and the only property a string exposes is its Length. That is why you end up with the length of the string in the file.

Ok, that's the reason why it worked like this.

To handle it properly, I suggest wrapping your expected schema and values in a PSObject and passing that to the CSV cmdlet, like this:

$wrap = New-Object PSObject -Property @{ 'Filesystem' = $FilesystemName; 'x-ms-acl' = $result.Headers.'x-ms-acl'; 'x-ms-permissions' = $result.Headers.'x-ms-permissions' }

$wrap | ConvertTo-Csv -NoTypeInformation
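The difference is easy to reproduce without any storage account. A small sketch with made-up sample values (`myfs` and the ACL string are placeholders, not output from a real request):

```powershell
# Sample value that stands in for $result.Headers.'x-ms-acl'
$acl = 'user::rwx,group::r-x,other::---'

# A bare string: the CSV cmdlet only sees its Length property
$fromString = $acl | ConvertTo-Csv -NoTypeInformation
# "Length"
# "31"

# A wrapped value: the CSV cmdlet sees the named properties
$fromObject = [pscustomobject]@{ Filesystem = 'myfs'; Acl = $acl } | ConvertTo-Csv -NoTypeInformation
# "Filesystem","Acl"
# "myfs","user::rwx,group::r-x,other::---"
```

Using `[pscustomobject]` instead of `New-Object PSObject -Property` is a design choice here: it keeps the columns in the order they were written.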

regards,

m.

Tysm for putting this document together. It is really helpful 🙂 I have 2 queries

1. How to modify the last rest API to revoke the access from file system?

2. Instead of storage account keys, if we want to use SAS token for authentication, how can we do that?